Diego Kiedanski, Principal AI Consultant, and Lucas Micol, Lead Machine Learning Engineer at Tryolabs, authored the following blog post.

In the ever-expanding landscape of artificial intelligence, vector databases stand as the unsung heroes, forming the foundation upon which many AI applications are built. These powerful databases provide the capacity to store and retrieve complex, high-dimensional data, enabling functionalities like Retrieval Augmented Generation (RAG) and sophisticated recommendation systems, covered in depth in our Advanced Retrieval Augmented Generation course, which this article is a part of.

Alongside vector databases, Large Language Model (LLM) frameworks such as LlamaIndex and LangChain have emerged as key players in accelerating AI development. By simplifying the prototyping process and reducing development overheads associated with API interactions and data formatting, these frameworks allow creators to focus on innovation rather than the intricacies of implementation.

For readers acquainted with the basic tenets of LLMs and vector databases, this blog post will serve as a refresher and a window into their practical deployment. We aim to walk you through constructing a complex and interactive shopping assistant. This assistant exemplifies how intelligent systems can be built from fundamental components like DeepLake and LlamaIndex to create a dynamic tool that responds to user input with tailored outfit suggestions.

Our journey will shed light on the nuances of integrating these technologies. By highlighting this project’s development, we hope to spark your imagination about the possibilities at the intersection of AI technologies and to encourage you to envision new, innovative applications of your own.

Project Overview

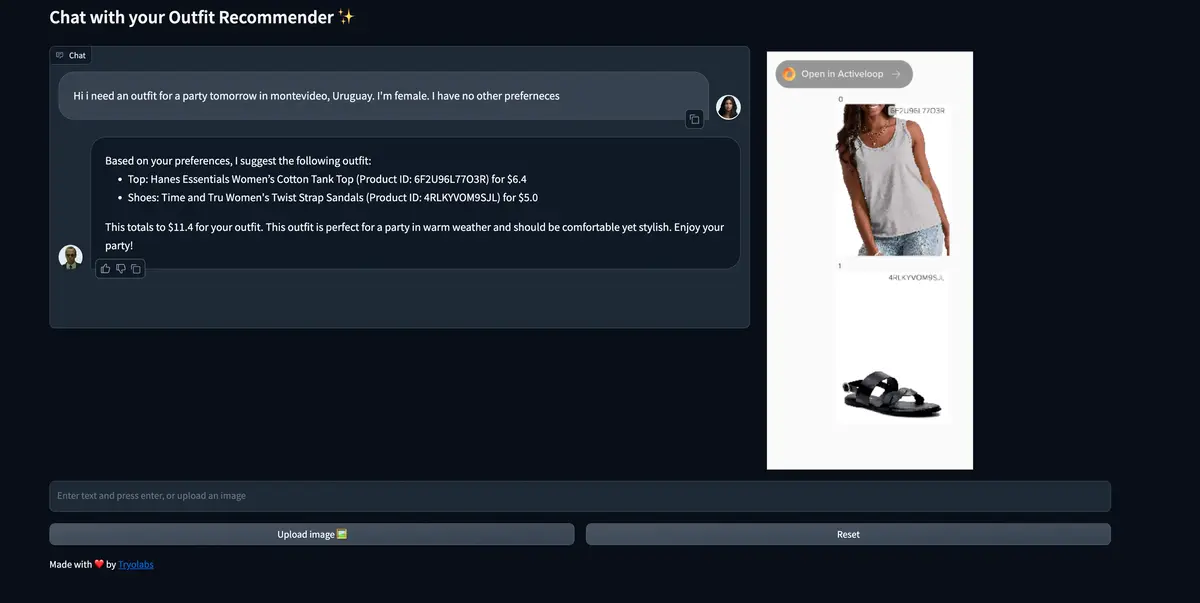

Our project is an AI-powered shopping assistant (the code is available in the project’s GitHub repo) designed to use image processing and LLM agents for outfit recommendations. Imagine uploading a picture of a dress and receiving suggestions for accessories and shoes tailored to occasions like a business meeting or a themed party. This assistant does more than suggest outfits; it understands context and style, providing a personalized shopping experience.

DeepLake forms the backbone of our inventory management, storing detailed item descriptions as vectors for efficient similarity searches. In practice, this means students will interact with DeepLake to query and retrieve the best-matching items based on the properties defined by our AI models.

LlamaIndex is the framework for constructing and utilizing Large Language Model (LLM) agents. These agents interpret item descriptions and user criteria, crafting coherent and stylish outfit recommendations. Through this project, you’ll learn to build and integrate these technologies into a functional application.

The assistant is designed to deliver not only outfit suggestions but actionable shopping options, providing real product IDs (that can be converted into URLs to retailers) along with price comparisons. Throughout the course, you will learn how to extend the AI’s capabilities to facilitate an end-to-end shopping experience.

The user interface of this application is designed with functionality and educational value in mind. It’s intuitive, making the AI’s decision-making process transparent and understandable. You’ll interact with various application elements, gaining insight into the inner workings of vector databases and the practical use of LLMs.

Architecture design

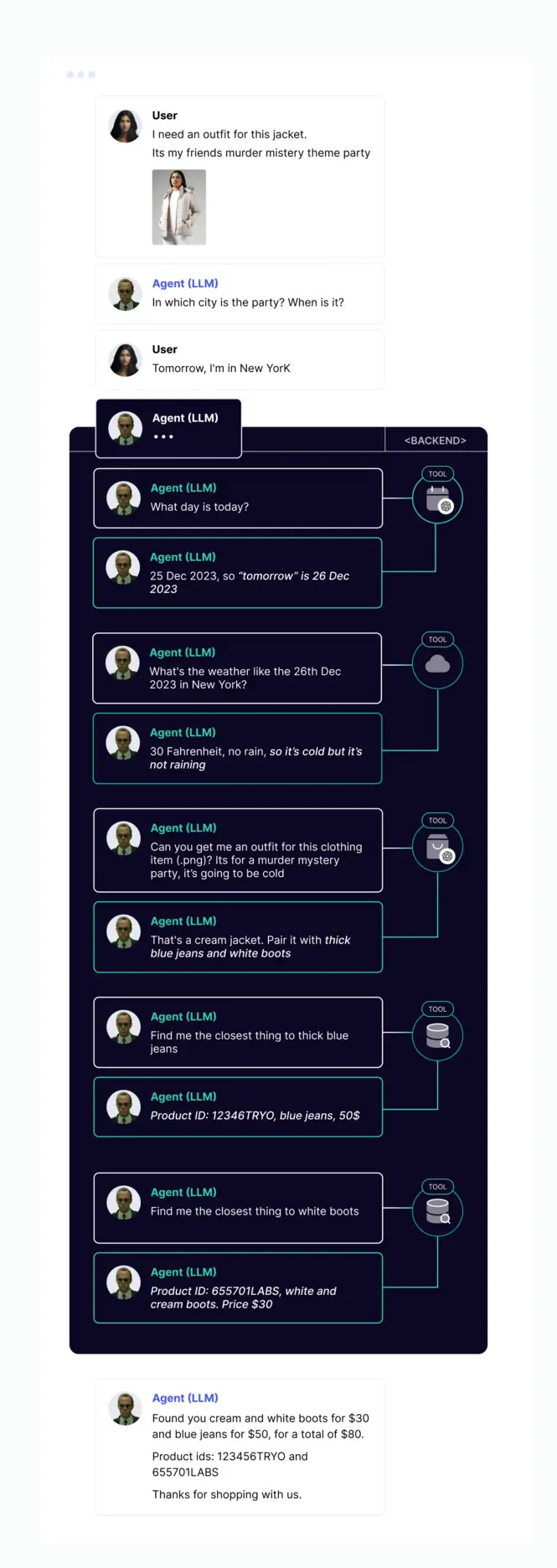

Our application is designed around the Agent framework: we use an LLM as a reasoning agent and provide it with tools, i.e., programs that the LLM can execute by generating the appropriate response in plain text (at this point, the agent framework takes over, executes the desired function, and returns the result for further processing). In this context, accessing the vector database is done through a tool, generating an outfit is done through a tool, and even getting today’s date is done through a tool.
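The tool-calling loop can be illustrated in plain Python. This is only a minimal sketch of the idea, not how LlamaIndex implements agents: the `TOOLS` registry, the `run_tool_call` helper, and the JSON request format are assumptions made for this example, and the "LLM response" is a hard-coded string.

```python
import json
from datetime import date

# Hypothetical tool registry: maps a tool name to a plain Python callable.
TOOLS = {
    "get_current_date": lambda: date.today().isoformat(),
    "add_prices": lambda prices: round(sum(prices), 2),
}


def run_tool_call(llm_text: str):
    """Parse a plain-text tool request and execute the matching tool.

    The JSON layout ({"tool": ..., "args": {...}}) is an assumption for
    this sketch; real agent frameworks define their own formats.
    """
    request = json.loads(llm_text)
    tool = TOOLS[request["tool"]]
    return tool(**request.get("args", {}))


# The "LLM" asks for a tool by emitting text; the framework runs it and
# would feed the result back to the LLM for further reasoning.
llm_text = '{"tool": "add_prices", "args": {"prices": [15.0, 24.99]}}'
result = run_tool_call(llm_text)
```

The key point is that the LLM never executes anything itself: it only produces text, and the framework decides which function that text maps to.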

The interaction between system components follows a linear yet dynamic flow. Upon receiving an image upload, GPT-4 Vision (gpt-4-vision-preview) generates descriptions for the accompanying outfit pieces. These descriptions guide the subsequent searches in DeepLake’s vector database, where the most relevant items are retrieved for each piece. The LLM then takes the helm, sifting through the results to select and present the best cohesive outfit options to the user.

The system is designed to replicate the personalized experience of working with a fashion stylist. When a user uploads a garment image, our AI stylist gets to work. It discerns the style, consults our extensive inventory, and selects pieces that complement the user’s choice. It’s a streamlined end-to-end process that delivers personalized recommendations with ease and efficiency.

(You can inspect the full size graphic here)

Dataset Collection and Vector Database Population

Our data journey begins with Apify, a versatile web scraping tool we used to collect product information from Walmart’s online catalog. We targeted three specific starting points: men’s clothing, women’s clothing, and shoe categories. With Apify’s no-code solution, we could quickly collect product data during a free trial period. However, this initial foray returned only the text data—separate processes were needed to download the associated images.

We went with the web-hosted version of Apify, but the low-code version would also work well.

We aimed to construct a representative dataset of men’s and women’s clothing, including various tops, bottoms, and accessories. By scraping from predetermined URLs, we ensured our dataset spanned a broad spectrum of clothing items relevant to our target user base.

The collected data is fairly rich and contains a wide variety of attributes. For this project, we kept the attributes to a minimum: the product ID, category, price, name, and image. Images were included as URLs, so we had to download them separately once the scraper had finished. Overall, we collected 1344 items.
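The download step is not shown in this post. A minimal sketch using only the standard library could look like the following; the `download_image` helper is hypothetical, and the `data/images/<product_id>.jpg` layout is assumed from the image path used later when generating descriptions.

```python
import urllib.request
from pathlib import Path


def download_image(url: str, product_id: str, image_dir: str = "data/images") -> Path:
    """Download one product image and save it as <image_dir>/<product_id>.jpg."""
    target = Path(image_dir) / f"{product_id}.jpg"
    target.parent.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(url) as response:
        target.write_bytes(response.read())
    return target
```

In practice this would be called once per row of the cleaned dataset, ideally with error handling and a short pause between requests.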

We used pandas to read the scraped JSONs and clean the collected data. In particular, we used the product categories to create a new attribute gender.

    import math

    import pandas as pd

    # df_raw: DataFrame with the scraped JSON records
    df = pd.DataFrame(
        {
            "brand": df_raw["brand"],
            # Keep the category path, dropping the generic "Clothing" level
            "category": df_raw["category"].apply(
                lambda x: [y["name"] for y in x["path"] if y["name"] != "Clothing"]
            ),
            "description": df_raw["shortDescription"],
            # Use the first listed image as the product image
            "image": df_raw["imageInfo"].apply(lambda x: x["allImages"][0]["url"]),
            "name": df_raw["name"],
            "product_id": df_raw["id"],
            # Products without a current price get an infinite price
            "price": [
                float(x["currentPrice"]["price"])
                if x["currentPrice"] is not None
                else math.inf
                for x in df_raw["priceInfo"]
            ],
        }
    )
    # Keep only products whose category path has at least two levels
    df = df[df["category"].apply(len) >= 2].copy()

    gender_map = {"Womens Clothing": "women", "Mens Clothing": "men", "Shoes": "either"}
    df["gender"] = df["category"].apply(lambda x: gender_map.get(x[0], "either"))

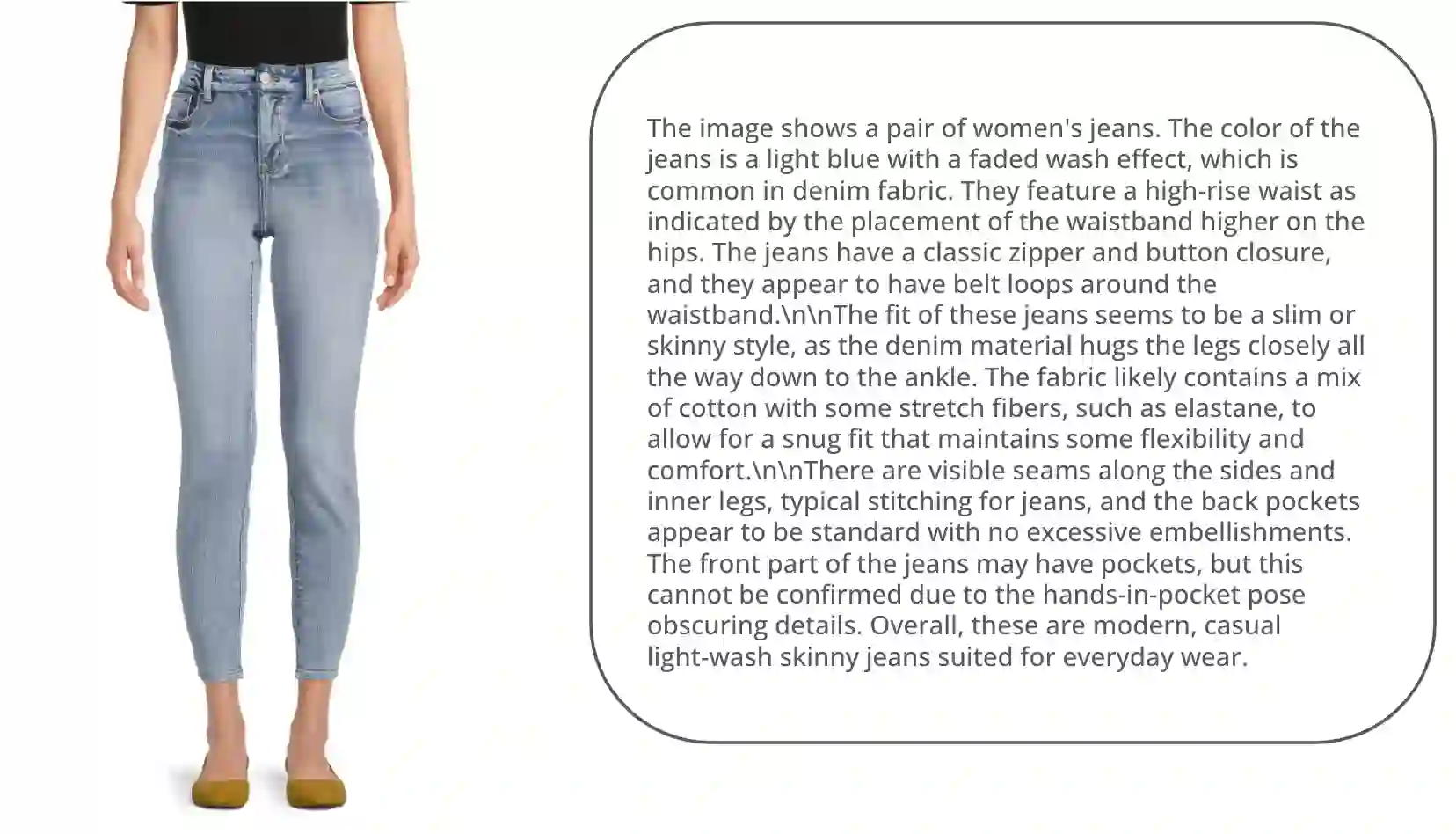
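To make the gender attribute concrete, here is the same mapping logic applied to a single made-up record, without pandas. The helper names `clean_category` and `infer_gender` and the sample category path are illustrative assumptions; only `gender_map` is taken from the cleaning code.

```python
gender_map = {"Womens Clothing": "women", "Mens Clothing": "men", "Shoes": "either"}


def clean_category(path):
    """Drop the generic "Clothing" level from a scraped category path."""
    return [level["name"] for level in path if level["name"] != "Clothing"]


def infer_gender(category):
    """Map the first remaining category level to a gender label."""
    return gender_map.get(category[0], "either")


# Made-up record in the same shape the cleaning code expects
raw_path = [{"name": "Clothing"}, {"name": "Womens Clothing"}, {"name": "Womens Tops"}]
category = clean_category(raw_path)
gender = infer_gender(category)
```

Any top-level category missing from `gender_map` falls back to "either", which is also the label used for shoes.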

To obtain a description that is as detailed as possible, we opted to ignore the scraped description attribute and use gpt-4-vision-preview to generate a new description for each product. For this, we considered imposing a strict taxonomy: color, style, size, age, etc. Ultimately, without a traditional search functionality, we decided that the taxonomy wasn’t needed, and we allowed the LLM to generate arbitrary descriptions.

    prompt = f"""
        Describe the piece of clothing in the image of the following category: {category}
        Do include the color, style, material and other important attributes of the item.
        """
    image_path = f"data/images/{product_id}.jpg"

    # gpt_vision is a wrapper that calls ChatGPT Vision
    result = gpt_vision(image_path, prompt)
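The `gpt_vision` wrapper itself is not shown in this post. As a rough sketch of what such a wrapper might prepare, the snippet below builds a chat message that combines the text prompt with a base64-encoded image, following the OpenAI chat message format for image inputs. The helper name `build_vision_messages` is hypothetical, and the actual API call is deliberately left out.

```python
import base64


def build_vision_messages(image_bytes: bytes, prompt: str) -> list:
    """Build a chat message holding a text prompt and a base64-encoded JPEG."""
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:image/jpeg;base64,{encoded}"},
                },
            ],
        }
    ]


# Three placeholder bytes stand in for a real image file here
messages = build_vision_messages(b"\xff\xd8\xff", "Describe the piece of clothing.")
```

A real wrapper would read the bytes from `image_path`, send `messages` to the vision model, and return the text of the reply.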

The following is an example image from the dataset (PRODUCT_ID=0REDJ7M0U7DV), together with the description generated by GPT-Vision.

Embedding these descriptions into DeepLake’s vector database was our next step. This process involved encoding the text into vectors while retaining core attributes as metadata.
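Deep Lake handles the similarity search internally, so none of the following is needed in the project itself. Purely as an illustration of what "finding the most similar vectors" means, here is a toy search using cosine similarity, one common similarity measure. The three-dimensional vectors and the item IDs are made up for the example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec, items, k=2):
    """Return the k items whose vectors are most similar to the query."""
    ranked = sorted(
        items,
        key=lambda item: cosine_similarity(query_vec, item["vector"]),
        reverse=True,
    )
    return ranked[:k]


# Toy 3-dimensional "embeddings"; real embeddings have far more dimensions
items = [
    {"product_id": "A", "vector": [1.0, 0.0, 0.0]},
    {"product_id": "B", "vector": [0.9, 0.1, 0.0]},
    {"product_id": "C", "vector": [0.0, 0.0, 1.0]},
]
best = top_k([1.0, 0.05, 0.0], items, k=2)
```

Items A and B point in almost the same direction as the query, so they are returned ahead of C.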

Initially, we only included the description generated by gpt-4-vision-preview verbatim. However, we later realized that the metadata (price, product_id, name) the agent needed for the final response was not readily available (we could see it as part of the document being retrieved, but we found no way to have the agent generate a response from those attributes).

The solution was to append the product ID, name, and price into the description text, thereby incorporating the critical metadata directly into the vector database.

    desc = f"""
    # Description
    {description}

    # Name
    {name}

    # Product ID
    {product_id}

    # Price
    {price}

    """
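Because the metadata now lives in the text itself, it can be read back from any retrieved document. The sketch below shows the idea with two hypothetical helpers, `format_product_text` and `read_field`; the blouse description and name are made-up sample values, and the layout follows the template above.

```python
import re


def format_product_text(description, name, product_id, price):
    """Embed the metadata in the text that gets indexed."""
    return (
        f"\n# Description\n{description}\n\n"
        f"# Name\n{name}\n\n"
        f"# Product ID\n{product_id}\n\n"
        f"# Price\n{price}\n\n"
    )


def read_field(text, heading):
    """Return the line that follows a '# <heading>' line, or None if absent."""
    match = re.search(rf"^# {re.escape(heading)}\n(.*)$", text, flags=re.MULTILINE)
    return match.group(1) if match else None


text = format_product_text("A relaxed-fit blouse.", "Example Blouse", "7COCIH59KT38", 15.0)
product_id = read_field(text, "Product ID")
```

In our application the LLM reads these fields directly from the retrieved text, so no explicit parsing step is required; the regex only demonstrates that the information is recoverable.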

Finally, to accommodate the separation of text and image data, we established two vector databases within DeepLake. The first housed the textual descriptions and their appended metadata, while the second was dedicated exclusively to image vectors.

    # Import paths assume llama_index 0.9.x
    from llama_index import Document, StorageContext, VectorStoreIndex
    from llama_index.vector_stores import DeepLakeVectorStore

    dataset_path = "hub://genai360/walmart-descriptions"
    vector_store = DeepLakeVectorStore(dataset_path=dataset_path, overwrite=True)
    storage_context = StorageContext.from_defaults(vector_store=vector_store)

    documents = []
    for i, row in df.iterrows():
        # ....

        # generate documents
        doc = Document(
            text=desc,
            metadata={"name": name, "product_id": product_id, "gender": gender},
        )
        documents.append(doc)

    index = VectorStoreIndex.from_documents(documents, storage_context=storage_context)

The image dataset is created separately with the Deep Lake API, storing each image alongside its product ID:

    import os

    import deeplake
    from tqdm import tqdm

    ds = deeplake.empty(ACTIVELOOP_DATASET_IMG)

    with ds:
        ds.create_tensor("images", htype="image", sample_compression="jpeg")
        ds.create_tensor("ids", htype="tag")

    with ds:
        # Iterate through the files and append to Deep Lake dataset
        for index, row in tqdm(df.iterrows()):
            product_id = row["product_id"]

            image_name = os.path.join(IMAGE_DIR, product_id + ".jpg")
            if os.path.exists(image_name):
                # Append data to the tensors
                ds.append({"images": deeplake.read(image_name), "ids": product_id})

Development of core tools

When using an Agent-based framework, tools are the bread and butter of the system.

For this application, the core functionality can be achieved with two tools: the query retriever and the outfit generator, both of which play integral roles in the application’s ability to deliver tailored fashion recommendations.

Inventory query engine

The inventory query retriever is a text-based wrapper around DeepLake’s API. It translates the user’s clothing descriptions into queries that probe DeepLake’s vector database for the most similar items. The only notable modification to the vanilla query_engine is adding a pydantic model as the output class. By doing this, we force the generation step of the RAG system (the “AG” in RAG) to return the relevant information for each item: the product ID, the name, and the price.

    # Import paths assume llama_index 0.9.x
    from typing import List

    from llama_index import ServiceContext, VectorStoreIndex
    from llama_index.llms import OpenAI
    from llama_index.vector_stores import DeepLakeVectorStore
    from pydantic import BaseModel


    class Clothing(BaseModel):
        """Data model for clothing items"""

        name: str
        product_id: str
        price: float


    class ClothingList(BaseModel):
        """A list of clothing items for the model to use"""

        cloths: List[Clothing]


    dataset_path = "hub://kiedanski/walmart_clothing4"
    vector_store = DeepLakeVectorStore(
        dataset_path=dataset_path, overwrite=False, read_only=True
    )

    llm = OpenAI(model="gpt-4", temperature=0.7)
    service_context = ServiceContext.from_defaults(llm=llm)
    inventory_index = VectorStoreIndex.from_vector_store(
        vector_store, service_context=service_context
    )

    # Inventory query engine tool
    inventory_query_engine = inventory_index.as_query_engine(output_cls=ClothingList)
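To show what a structured output buys us, here is a sketch of parsing a response of that shape into typed objects. It uses standard-library dataclasses as a stand-in for the pydantic models so that it runs without dependencies; `ClothingItem` and `parse_clothing_list` are hypothetical names, and the JSON string mirrors the shape of the tool output shown in the demo later in this post.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class ClothingItem:
    name: str
    product_id: str
    price: float


def parse_clothing_list(raw_json: str) -> List[ClothingItem]:
    """Parse {"cloths": [...]} and fail loudly if a required field is missing."""
    payload = json.loads(raw_json)
    items = []
    for entry in payload["cloths"]:
        items.append(
            ClothingItem(
                name=str(entry["name"]),
                product_id=str(entry["product_id"]),
                price=float(entry["price"]),
            )
        )
    return items


raw = '{"cloths": [{"name": "Example Blouse", "product_id": "7COCIH59KT38", "price": 15.0}]}'
items = parse_clothing_list(raw)
```

With a fixed schema, a response that omits the price or the product ID is rejected instead of silently producing an incomplete recommendation.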

Our outfit generator is built around gpt-4-vision-preview, which takes in the user’s image and describes complementary clothing items. The critical feature here is instructing the tool not to search for items in the same category as the uploaded image. This restriction ensures the AI focuses on assembling a complete outfit rather than suggesting items similar to the one provided.

    # Import paths assume llama_index 0.9.x
    from llama_index import SimpleDirectoryReader
    from llama_index.multi_modal_llms.openai import OpenAIMultiModal
    from llama_index.output_parsers import PydanticOutputParser
    from llama_index.program import MultiModalLLMCompletionProgram
    from llama_index.tools import FunctionTool
    from pydantic import BaseModel


    class Outfit(BaseModel):
        top: str = ""
        bottom: str = ""
        shoes: str = ""


    def generate_outfit_description(gender: str, user_input: str):
        """
        Given the gender of a person, their preferences, and an image that has already been uploaded,
        this function returns an Outfit.
        Use this function whenever the user asks you to generate an outfit.

        Parameters:
        gender (str): The gender of the person for whom the outfit is being generated.
        user_input (str): The preferences of the user.

        Returns:
        response: The generated outfit.

        Example:
        >>> generate_outfit_description("male", "I prefer casual wear")
        """

        # Load input image
        image_documents = SimpleDirectoryReader("./input_image").load_data()

        # Define multi-modal llm
        openai_mm_llm = OpenAIMultiModal(model="gpt-4-vision-preview", max_new_tokens=100)

        # Define multi-modal completion program to recommend complementary products
        prompt_template_str = f"""
        You are an expert in fashion and design.
        Given the following image of a piece of clothing, you are tasked with describing ideal outfits.

        Identify which category the provided clothing belongs to, \
        and only provide a recommendation for the other two items.

        In your description, include color and style.
        This outfit is for a {gender}.

        Return the answer as a json for each category. Leave the category of the provided input empty.

        Additional requirements:
        {user_input}

        Never return this output to the user. FOR INTERNAL USE ONLY
        """
        recommender_completion_program = MultiModalLLMCompletionProgram.from_defaults(
            output_parser=PydanticOutputParser(Outfit),
            image_documents=image_documents,
            prompt_template_str=prompt_template_str,
            llm=openai_mm_llm,
            verbose=True,
        )

        # Run recommender program
        response = recommender_completion_program()

        return response


    outfit_description_tool = FunctionTool.from_defaults(fn=generate_outfit_description)

User preferences such as occasion or style are added to the prompt directly, under “Additional requirements”. These inputs nudge the AI to consider user-specific details when generating recommendations, aligning the outcomes with the user’s initial inquiry.

The system functionality unfolds with the LLM agent at the helm. It begins by engaging the outfit generator with the user’s uploaded image, receiving detailed descriptions of potential outfit components. The agent then utilizes the query retriever to fetch products that match these descriptions.
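The flow can be sketched with stub functions. In the real system the agent decides when to call each tool; here the control flow is hard-coded for illustration, and `OutfitDescription`, `search_outfit`, and `fake_search` are hypothetical stand-ins rather than project code.

```python
from dataclasses import asdict, dataclass


@dataclass
class OutfitDescription:
    top: str = ""
    bottom: str = ""
    shoes: str = ""


def search_outfit(outfit, search_inventory):
    """Query the inventory once per non-empty category.

    The category of the uploaded item is left empty by the outfit
    generator, so it is skipped here.
    """
    results = {}
    for category, description in asdict(outfit).items():
        if description:
            results[category] = search_inventory(description)
    return results


def fake_search(description):
    """Stub standing in for the inventory query engine."""
    return [f"match for: {description}"]


# The user uploaded a bottom, so only top and shoes are described
outfit = OutfitDescription(top="pastel pink blouse", shoes="tan ankle strap sandals")
results = search_outfit(outfit, fake_search)
```

Leaving the uploaded item's category empty is what prevents the assistant from recommending another item of the same kind.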

System integration and initial testing

The successful integration of various AI components into a seamless shopping assistant experience required a straightforward approach: encapsulating each function into a tool and crafting an agent to orchestrate these tools.

LlamaIndex Agent creation and integration process

- Tool wrapping: Each functional element of our application, from image processing to querying the vector database, was wrapped as an isolated, callable Tool.

- Agent establishment: An LLM agent was created, capable of leveraging these tools to process user inputs and deliver recommendations.

    # Import paths assume llama_index 0.9.x
    from llama_index.agent import OpenAIAgent
    from llama_index.llms import OpenAI

    llm = OpenAI(model="gpt-4", temperature=0.2)

    agent = OpenAIAgent.from_tools(
        system_prompt="""
        You are a specialized shopping assistant.

        Customers will provide you with a piece of clothing, and you will generate a matching outfit.

        Always remember to ask for the user gender.

        Your final answer needs to be the product_id associated with the best matching product in our inventory.

        For each product of the outfit, search the inventory.

        Include the total price of the recommended outfit.
        """,
        tools=[
            inventory_query_engine_tool,
            outfit_description_tool,
        ],
        llm=llm,
        verbose=True,
    )

Initial testing challenges

Our testing phase provided valuable insights, particularly with our initial use of GPT-3.5. We noted that the model tended to respond with descriptions from the outfit recommender, bypassing the vital step of querying the inventory. We addressed this by switching to GPT-4, which used all available tools appropriately, ensuring the assistant performed the item search as designed.

Demo: Step-by-step commands and interactions

Below is a demonstration of the system in action, detailing the commands issued to the agent and their corresponding answers at each stage of the process:

Image upload and description generation

    >>> r = agent.chat("Hi")
    STARTING TURN 1
    ---------------
    Hello! How can I assist you today?

    >>> r = agent.chat("What are your tools?")
    STARTING TURN 1
    ---------------
    As a shopping assistant, I have two main tools at my disposal:

    1. **Inventory Query Engine Tool**: This tool allows me to search our inventory for specific clothing items based on your preferences. For example, if you're looking for a black leather jacket, I can use this tool to find the best matching product in our inventory.

    2. **Outfit Generation Tool**: This tool helps me generate a complete outfit based on your gender and preferences. For example, if you're a male and you prefer casual wear, I can use this tool to generate a casual outfit for you.

    Please let me know how I can assist you further.

    >>> r = agent.chat("I want an outfit for a casual birthday party")
    STARTING TURN 1
    ---------------

    Sure, I'd be happy to help with that. Could you please tell me your gender to better assist you?

    >>> r = agent.chat("I'm a woman")

Currently, the process of uploading the image is separate. For this version to work, an image needs to exist in the local folder ./input_image/, which is where the outfit generation tool reads from. Later, when working with the UI, users can click a button to upload a different image.

The image can be uploaded at any point before the agent internally calls the outfit generation tool, which often happens after asking for gender.

Outfit generation

In this section, we can see how the agent internally uses the tool to generate an outfit from the user description, image, and gender.

    STARTING TURN 1
    ---------------

    === Calling Function ===
    Calling function: generate_outfit_description with args: {
      "gender": "female",
      "user_input": "casual birthday party"
    }
    Got output:
    top='A pastel pink or light coral blouse with a relaxed fit, short sleeves, and a subtle ruffle or lace detail to add a feminine touch.'
    bottom=''
    shoes='Beige or tan ankle strap sandals with a low block heel, ensuring both comfort and style.'
    ========================

Querying the inventory

At this stage, the agent obtains a description for the two pieces of clothing that it needs to retrieve and uses the query engine to retrieve the best matches from the vector database.

    STARTING TURN 2
    ---------------

    === Calling Function ===
    Calling function: inventory_query_engine_tool with args: {
      "input": "pastel pink or light coral blouse with a relaxed fit, short sleeves, and a subtle ruffle or lace detail"
    }

    Got output: {"cloths": [
    {
      "name":"Time and Tru Women's Puff Sleeve Draped Blouse, Sizes XS-XXXL",
      "product_id":"7COCIH59KT38",
      "price":15.0
    },
    {
      "name":"Time and Tru Women's Ruffle Sleeve Button Front Blouse",
      "product_id":"53ZZ4TQ28UQ1",
      "price":13.18
    }
    ]}
    ========================

    STARTING TURN 3
    ---------------

    === Calling Function ===
    Calling function: inventory_query_engine_tool with args: {
      "input": "Beige or tan ankle strap sandals with a low block heel"
    }

    Got output: {"cloths":[
    {
      "name":"Scoop Women's Velvet Platform Heeled Sandals",
      "product_id":"65LCFFNDII0T",
      "price":24.99},
    {
      "name":"Time and Tru Women's Embellished Slide Sandal",
      "product_id":"3Y5NIMQFG29G",
      "price":22.0
    }
    ]}
    ========================

We can observe that, for each query, the query engine retrieves the two best potential matches and returns both to the agent.

Final recommendation presentation

    STARTING TURN 4
    ---------------

    Based on your preferences, I recommend the following outfit:

    1. Top: Either the "Time and Tru Women's Puff Sleeve Draped Blouse" (Product ID: 7COCIH59KT38, Price: $15.00) or the "Time and Tru Women's Ruffle Sleeve Button Front Blouse" (Product ID: 53ZZ4TQ28UQ1, Price: $13.18).

    2. Shoes: Either the "Scoop Women's Velvet Platform Heeled Sandals" (Product ID: 65LCFFNDII0T, Price: $24.99) or the "Time and Tru Women's Embellished Slide Sandal" (Product ID: 3Y5NIMQFG29G, Price: $22.00).

    The total price of the outfit will be either $39.99 or $35.18, depending on your choices.

    Would you like me to find a matching bottom for you as well?

After analyzing the options, the agent presents the user with the best matching pairs, complete with item details such as price and purchase links (the product ID could be converted to a URL later). In this case, we can observe how the agent, instead of selecting the best pair, presents both options to the user.
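The totals quoted by the agent can be checked with a few lines of Python. This is a sketch for verification only, not part of the application; `outfit_totals` is a hypothetical helper, and the IDs and prices are copied from the tool outputs above.

```python
from itertools import product

tops = [("7COCIH59KT38", 15.00), ("53ZZ4TQ28UQ1", 13.18)]
shoes = [("65LCFFNDII0T", 24.99), ("3Y5NIMQFG29G", 22.00)]


def outfit_totals(*categories):
    """Return every combination of one item per category with its total price."""
    combos = []
    for combo in product(*categories):
        ids = tuple(item_id for item_id, _ in combo)
        total = round(sum(price for _, price in combo), 2)
        combos.append((ids, total))
    return combos


totals = outfit_totals(tops, shoes)
```

There are four possible pairs, with totals of 39.99, 37.0, 38.17, and 35.18. The agent's answer quotes only the most expensive and the cheapest of them.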

Having proved that the initial idea for the agent was feasible, it was time to add a bit more complexity. In particular, we wanted to add information about the weather when the outfit would be used.

Expanding functionality

The natural progression of our shopping assistant entails augmenting its capacity to factor in external elements such as weather conditions. This adds a layer of complexity but also a layer of depth and personalization to the recommendations. These enhancements came with their own sets of technical challenges.

Adapting to the weather

- Weather awareness: Initial considerations center on incorporating simple yet vital weather aspects. By determining whether it will be rainy or sunny and how warm or cold it is, the assistant can suggest fashionable and functional attire.

- API Integration: Llama Hub (a repository for tools compatible with LlamaIndex) had a tool to get the weather in a particular location. Unfortunately, the tool required a paid https://openweathermap.org/ plan. To circumvent this problem, we modified the tool to use a similar but free service of the same provider (running this code requires a free OPEN_WEATHER_MAP_API key).

    # Import paths assume llama_index 0.9.x and the llama_hub package
    import logging
    from typing import List

    from llama_hub.tools.weather.base import OpenWeatherMapToolSpec
    from llama_index import Document


    class CustomOpenWeatherMapToolSpec(OpenWeatherMapToolSpec):
        spec_functions = ["weather_at_location", "forecast_at_location"]

        def __init__(self, key: str, temp_units: str = "celsius") -> None:
            super().__init__(key, temp_units)

        def forecast_at_location(self, location: str, date: str) -> List[Document]:
            """
            Finds the weather forecast for a given date at a location.

            The forecast goes from today until 5 days ahead.

            Args:
                location (str):
                    The location to find the weather at.
                    Should be a city name and country.
                date (str):
                    The desired date to get the weather for.
            """
            from pyowm.commons.exceptions import NotFoundError

            try:
                forecast = self._mgr.forecast_at_place(location, "3h")
            except NotFoundError:
                return [Document(text=f"Unable to find weather at {location}.")]

            w = forecast.get_weather_at(date)

            temperature = w.temperature(self.temp_units)
            temp_unit = "°C" if self.temp_units == "celsius" else "°F"

            # TODO: this isn't working.. Error: 'max' key.
            try:
                temp_str = self._format_forecast_temp(temperature, temp_unit)
            except Exception:
                logging.exception(f"Could not _format_forecast_temp {temperature}")
                temp_str = str(temperature)

            try:
                weather_text = self._format_weather(location, temp_str, w)
            except Exception:
                logging.exception(f"Could not _format_weather {w}")
                weather_text = str(w) + " " + str(temp_str)

            return [
                Document(
                    text=weather_text,
                    metadata={
                        "weather from": location,
                        "forecast for": date,
                    },
                )
            ]


    weather_tool_spec = CustomOpenWeatherMapToolSpec(key=OPEN_WEATHER_MAP_KEY)
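Since the forecast only covers today and the following five days, a small guard can be useful before the API is called. The helper below is a hypothetical addition, not part of the tool above, and treating both ends of the window as inclusive is an assumption.

```python
from datetime import date, timedelta


def within_forecast_window(target: date, today: date, horizon_days: int = 5) -> bool:
    """True if target falls between today and today + horizon_days (inclusive)."""
    return today <= target <= today + timedelta(days=horizon_days)


# Example with fixed dates so the result does not depend on when it is run
party_is_covered = within_forecast_window(date(2024, 1, 13), today=date(2024, 1, 10))
```

When the requested date falls outside the window, the assistant could fall back to recommendations that do not depend on the weather.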

User interaction and data handling

Location input: For accurate weather data, the shopping assistant often queries the user for their location. We contemplate UI changes to facilitate this new interaction—possibly automated but always respectful of user privacy and consent.

    >>> r = agent.chat("...")
    ...
    Great! Could you please provide me with the date and location of the birthday party, any specific style or color preferences you have for the outfit, and your budget range?

Synchronizing with time

Temporal challenges: Because LLMs aren’t inherently time-aware, we introduced a new tool that provides the current date. This lets the LLM work out the correct date to pass to the weather API, aligning recommendations with the actual conditions.

    from datetime import date

    # Import path assumes llama_index 0.9.x
    from llama_index.tools import FunctionTool


    def get_current_date():
        """
        A function to return today's date.

        Call this before any other functions if you are unaware of the current date.
        """
        return date.today()


    get_current_date_tool = FunctionTool.from_defaults(fn=get_current_date)

It took us a little bit to remember that we needed this. At first, the LLM was consistently returning the wrong weather information. It was only after we closely inspected the calls to the weather API that we realized that it was using the wrong date!

User Interface (UI) development

In developing the user interface for our AI-powered shopping assistant, we wanted the platform to reflect the conversational nature of the agent’s interactions. We used Gradio, which makes it possible to rapidly prototype and deploy a chat-like interface that users find familiar and engaging.

Embracing chat interface with Gradio

Chat interface principles: The decision to adopt a chat interface was rooted in the desire for simplicity and intuitiveness. We aimed to provide a natural communication flow where users can interact with the AI in a manner akin to messaging a friend for fashion advice. Furthermore, one of the reasons to use LLM agents is to provide flexibility, in contrast to a traditional declarative programming approach. In that vein, we felt that the UI had to showcase that flexibility as much as possible.

Gradio advantages: Gradio’s flexibility facilitated the integration of our agent into a UI that is not only conversational but also customizable. Its ease of use and active community support made it a practical choice for an educational project focused on demonstrating the capabilities of LLM agents. For example, it lets us add custom buttons to upload images or trigger particular functions.

Overcoming technical hurdles

- Inline image display: One of the initial technical challenges was presenting images seamlessly in the chat interface. Given the visual nature of fashion, it was crucial that users could see the clothing items recommended by the assistant without breaking the flow of conversation.

- Activeloop integration: To resolve this, we leveraged Activeloop’s integration with Gradio. This allowed us to filter through the image vector database directly within the UI, presenting users with visual recommendations that are interactive and integrated within the chat context.

It was not trivial to get Activeloop’s extension working for our project. Our solution consisted of an HTML component in Gradio with an iframe pointing to the image vector dataset. We could update the URL every time the chatbot answered, but we needed a way to get the product IDs from its answer. Ultimately, since all the product IDs follow the same pattern, we went for a “hacky” approach: search the agent’s response with a regex for product IDs and, if there are more than 2 matches, update the iframe URL parameters; otherwise, do nothing.
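A minimal sketch of this approach is shown below. The regex assumes that product IDs are 12 uppercase letters or digits, as in the examples in this post. The base URL and the `ids` query parameter are placeholders, since the real iframe endpoint and its parameters are not given here, and `updated_iframe_url` is a hypothetical helper name.

```python
import re
from urllib.parse import urlencode

# Assumption: product IDs are 12 uppercase letters/digits, as in this post's examples
PRODUCT_ID_PATTERN = re.compile(r"\b[A-Z0-9]{12}\b")

# Placeholder base URL; the real iframe endpoint is not given in the post
IFRAME_BASE_URL = "https://example.com/visualizer"


def updated_iframe_url(agent_response: str, current_url: str) -> str:
    """Return a new iframe URL if the response mentions more than 2 product IDs."""
    product_ids = PRODUCT_ID_PATTERN.findall(agent_response)
    if len(product_ids) > 2:
        query = urlencode({"ids": ",".join(product_ids)})
        return f"{IFRAME_BASE_URL}?{query}"
    # Too few matches: keep showing whatever the iframe displayed before
    return current_url
```

A pattern this loose could also match an unrelated 12-character uppercase token, which is part of why we describe the approach as hacky.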

Conclusion

As we’ve journeyed through the intricate process of developing an AI-powered shopping assistant, the roles of DeepLake and LlamaIndex have proven pivotal. From the versatile data handling in DeepLake’s database for AI to the adaptive LLM agents orchestrated by LlamaIndex, these technologies have showcased their robust capabilities and the potential for innovation in the AI space.

DeepLake has demonstrated its capacity to seamlessly manage and retrieve complex structures, enabling efficient and precise item matchings. Its architecture has been the backbone of the shopping assistant, proving that even complex data interactions can be handled with elegance and speed. In addition, it allowed for seamless visualization of the data for the RAG application.

LlamaIndex, on the other hand, has been instrumental in empowering the shopping assistant with natural language processing and decision-making abilities. Its framework has enabled the LLM agents to interpret, engage, and personalize the shopping experience, charting new courses in user-AI interaction.

Looking beyond the shopping assistant itself, the potential uses for these technologies span myriad domains. The flexibility and power of DeepLake and LlamaIndex could drive innovation in fields ranging from healthcare to finance and from educational tools to creative industries.

Your insights and feedback are crucial as we continue to navigate and expand the frontiers of artificial intelligence. We are particularly eager to hear your views on the innovative applications of vector databases and LLM frameworks. Additionally, your suggestions for topics you’d like us to delve into in future segments are highly appreciated. If you’re interested in partnering with Tryolabs to build similar projects with Deep Lake & LlamaIndex, feel free to reach out to us.
