2025 will be the year of driving tangible ROI with GenAI. Knowledge Agents over multi-modal data are the key to achieving this.

Why We Built This

We’ve spent this past year talking to a lot of companies (mainly Fortune 500), noticing a distinct pattern: companies are prepared to compromise on latency, but not accuracy. In fact, accuracy is non-negotiable and directly linked to the ability of the company to drive actual revenue or efficiency gains with the help of GenAI (thus justifying the overall expense on additional infra + models).

Knowledge workers spend a lot of time conducting repetitive, highly manual searches: nurses compiling patient health data for insurance claim review, paralegals running patent searches ahead of a filing, and research associates evaluating drug compound hypotheses against newly published papers on PubMed.

Conservative estimates suggest that manual searches at enterprises waste around 21.3%-25% of productivity. That’s about $20K annually per worker, so a mid-size firm of 1,000 employees might bleed roughly $20 million per year to inefficiencies in search. Think of it this way: every time your teams go hunting for lost files, it’s like you’re paying them to play “hide-and-seek” with your data, and nobody really wins.
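As a back-of-the-envelope check on these figures, the short sketch below multiplies the per-worker loss by headcount. The implied cost per worker is not stated in this post; it is derived here only as the annual cost at which a 21.3%-25% loss would equal $20K, and the function names are illustrative.

```python
# Back-of-the-envelope check of the search-inefficiency figures quoted above.
LOSS_PER_WORKER_USD = 20_000   # "about $20K annually per worker"
HEADCOUNT = 1_000              # the mid-size firm from the example
WASTE_SHARE_LOW = 0.213        # lower bound of the quoted productivity loss
WASTE_SHARE_HIGH = 0.25        # upper bound of the quoted productivity loss


def annual_firm_loss(loss_per_worker: float, headcount: int) -> float:
    """Total yearly loss if every worker loses the same amount."""
    return loss_per_worker * headcount


def implied_cost_per_worker(loss_per_worker: float, waste_share: float) -> float:
    """Annual cost per worker at which `waste_share` equals the quoted loss.

    This is a derived assumption, not a number from the post.
    """
    return loss_per_worker / waste_share


firm_loss = annual_firm_loss(LOSS_PER_WORKER_USD, HEADCOUNT)
cost_low = implied_cost_per_worker(LOSS_PER_WORKER_USD, WASTE_SHARE_HIGH)
cost_high = implied_cost_per_worker(LOSS_PER_WORKER_USD, WASTE_SHARE_LOW)

print(f"Firm-wide loss: ${firm_loss:,.0f} per year")
print(f"Implied cost per worker: ${cost_low:,.0f} to ${cost_high:,.0f}")
```

In other words, the $20K figure is consistent with a per-worker cost somewhere between $80K and about $94K a year, and it scales linearly with headcount.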

Today, we’re thrilled to introduce a solution that fixes that - highly accurate, thoughtful answers generated by an AI Knowledge Agent, across internal and external multi-modal data.

But, OpenAI Deep Research?

Both Deep Lake and OpenAI’s Deep Research aim to enhance AI-driven research and retrieval, but they take different approaches. OpenAI’s Deep Research acts as an AI-powered assistant that autonomously searches the web, while Deep Lake provides an enterprise-grade, multi-modal AI retrieval system that works seamlessly with both public and private data—delivering comparable, if not more accurate, results with greater flexibility in types of data you can ask questions over and …

1. Connect Your Private and Public Data

A key distinction is that Deep Lake isn’t limited to public data: it is designed for enterprises that need AI-powered retrieval across proprietary, sensitive, and high-value datasets. In our own research, about 63% of companies struggle to unify their data and connect it to AI. Deep Lake can be deployed directly in your S3 or Azure environment (it is available on the respective marketplaces), so you can start asking questions over your data right away.

It’s as easy as:

- While Deep Research searches only publicly available sources, Deep Lake allows organizations to securely store and retrieve insights from their own internal research, reports, IP, and confidential data.

- This is crucial for industries like biotech, MedTech, finance, and legal, where organizations rely on proprietary information rather than open-web searches.

- Enterprise-grade security (including RBAC, SOC 2 Type II compliance, pen-testing, etc.) ensures that sensitive data remains compliant and protected.

2. Multi-Modal Retrieval with Visual Language Models

Deep Lake is built from the ground up for multi-modal AI retrieval, making it superior for tasks involving diverse data types. While Deep Research primarily deals with text-based queries (with some ability to process images and files), Deep Lake supports:

- Seamless cross-modal queries across text, image, video, audio, and structured metadata.

- Fine-tuned VLMs specifically optimized for multi-modal retrieval, ensuring that even highly complex, mixed-data queries return accurate and relevant results.

- Real-time hybrid search, combining vector-based, keyword-based, and structured search techniques to enhance precision.
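To make the idea of hybrid search concrete, here is a minimal sketch that filters on structured metadata and then merges a vector ranking with a keyword ranking using reciprocal rank fusion (RRF). RRF is a common, generic fusion method chosen here for illustration; this post does not say how Deep Lake combines the signals, and the function names, document IDs, and metadata fields are all hypothetical.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores 1 / (k + rank) per list it appears in;
    k=60 is the constant commonly used with RRF.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


def hybrid_search(vector_hits, keyword_hits, metadata, required):
    """Apply a structured filter, then fuse vector and keyword rankings."""
    allowed = {
        doc_id
        for doc_id, fields in metadata.items()
        if all(fields.get(name) == value for name, value in required.items())
    }
    filtered = [
        [doc_id for doc_id in hits if doc_id in allowed]
        for hits in (vector_hits, keyword_hits)
    ]
    return reciprocal_rank_fusion(filtered)


# Hypothetical result lists from two separate retrievers.
vector_hits = ["report_03", "scan_17", "note_42"]
keyword_hits = ["report_03", "note_42", "scan_17"]
metadata = {
    "report_03": {"dept": "radiology"},
    "scan_17": {"dept": "radiology"},
    "note_42": {"dept": "billing"},
}

results = hybrid_search(vector_hits, keyword_hits, metadata, {"dept": "radiology"})
print(results)
```

Rank-based fusion avoids having to put vector similarities and keyword scores on a common scale, which is one reason it is a popular default for this kind of merge.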

3. Comparable or Superior Accuracy in Retrieval

Deep Lake’s advanced retrieval architecture is designed to deliver results that are as accurate as, or even more accurate than, OpenAI’s Deep Research. Unlike Deep Research, which primarily relies on test-time reasoning and chain-of-thought processes, Deep Lake leverages:

- Deep Memory, which enhances retrieval accuracy by dynamically learning from past searches, personalizing search results to your use case, and picking up industry jargon and user preferences. This ensures gold-standard performance in domain-specific use cases.

- Multi-modal retrieval techniques, allowing for seamless cross-referencing of data between text, images, video, audio, and structured data across clouds and local storage.

4. BYOM: Bring-Your-Own-Model

Deep Lake isn’t tied to a sole model provider, and offers complete flexibility in choosing your underlying AI model.

You can plug in any model of your choice, including state-of-the-art open-source models, fine-tuned domain-specific LLMs and SLMs, or closed-source leaders like Anthropic Claude and Google Gemini.
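One way to picture a bring-your-own-model setup is a small interface that any model client can satisfy. The sketch below is purely illustrative: `AnswerModel`, `EchoModel`, and `answer` are hypothetical names, not part of Deep Lake's API, and `EchoModel` stands in for a real provider client.

```python
from typing import Protocol, Sequence


class AnswerModel(Protocol):
    """Anything with a generate(prompt) -> str method can be plugged in."""

    def generate(self, prompt: str) -> str:
        ...


class EchoModel:
    """Stand-in for a provider-specific client (hypothetical)."""

    def __init__(self, name: str) -> None:
        self.name = name

    def generate(self, prompt: str) -> str:
        # A real client would call a hosted or local model here.
        return f"[{self.name}] {prompt}"


def answer(question: str, evidence: Sequence[str], model: AnswerModel) -> str:
    """Build a prompt from retrieved evidence and delegate to the chosen model."""
    prompt = "Evidence:\n" + "\n".join(evidence) + f"\nQuestion: {question}"
    return model.generate(prompt)


reply = answer("What changed in Q3?", ["Q3 revenue rose 4%."], EchoModel("local-slm"))
print(reply)
```

Because the retrieval step only depends on the `generate` method, swapping an open-source model for a closed-source one would not require touching the rest of the pipeline in a design like this.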

5. Sub-Second Queries with Cost-Optimized Performance

A query in natural language gets automatically translated into a set of SQL queries. Under the hood, we also figure out which additional subsets of data to query in order to gather full evidence for a highly accurate reply.

Deep Lake’s index-on-the-lake technology enables sub-second queries directly from object storage, providing a 10× cost efficiency advantage over traditional in-memory systems. This results in:

- Sub-second latency, even across massive datasets (100M+ records).

- No need for expensive caching—queries are optimized for real-time retrieval while keeping storage costs low.

- Scalable across cloud environments, making it suitable for AI-native applications requiring rapid, cost-effective AI search.

How it Works

Deep Lake focuses on the building blocks of data storage and retrieval, to enable you to store the data and retrieve it in the best possible way for AI workflows.

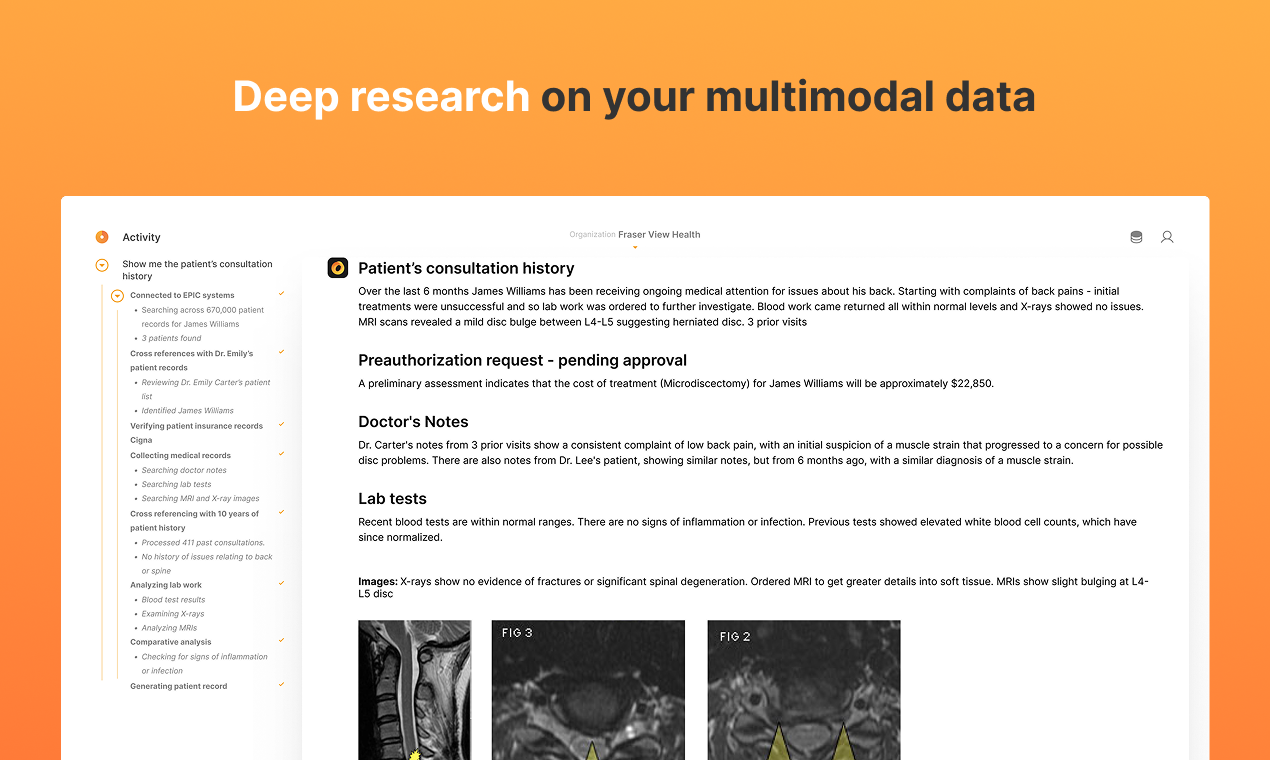

After connecting to your data and indexing it, Deep Lake’s Knowledge Agent can plan a set of research tasks and execute multi-step queries across various datasets and modalities - understanding what data it needs to answer the question (or, importantly, if it even has the evidence to answer the question). The agent also leverages advanced retrieval techniques like MaxSim for accurate retrieval based on both visual and textual context - and includes that information as references alongside citations from text data across up to billions of rows of data.
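For readers unfamiliar with MaxSim, the sketch below shows the scoring rule as it is usually defined in late-interaction retrieval: each query vector is matched to its most similar document vector, and those maxima are summed. This is a generic pure-Python illustration with toy two-dimensional vectors, not Deep Lake's implementation; in practice the document vectors could represent text tokens or image patches.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def maxsim(query_vectors, doc_vectors):
    """Sum, over query vectors, of the best dot product with any doc vector."""
    if not doc_vectors:
        return 0.0  # nothing to match against
    return sum(max(dot(q, d) for d in doc_vectors) for q in query_vectors)


# Toy embeddings (assumed, for illustration only).
query = [[1.0, 0.0], [0.0, 1.0]]
documents = {
    "doc_a": [[1.0, 0.0], [0.0, 1.0]],  # covers both query vectors
    "doc_b": [[1.0, 0.0], [1.0, 0.0]],  # covers only the first query vector
}

scores = {doc_id: maxsim(query, vectors) for doc_id, vectors in documents.items()}
ranking = sorted(scores, key=scores.get, reverse=True)
print(scores, ranking)
```

Because every query vector gets its own best match, a document is rewarded for covering all parts of the question rather than just one dominant term.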

What Type of Questions Can I Ask?

Deep Lake is available right now to everyone in your team - with no limit to the number of questions you can ask or the data size and modality you can query across.

Here are a few questions you can ask:

Synthesize reports across patient chart data, lab tests, MRIs.

Find mentions of complex terms & concepts and draw connections

The below is taken from Marcel Proust’s novel In Search of Lost Time (French: À la recherche du temps perdu) - one of the longest books ever written (at more than 1150 pages in PDF format).

Query Across Research Findings

Question: What's the DeepSeek performance across reasoning tasks?

The answers will contain information both from the paper text and figures.

Known Limitations

As with any system, there are some limits. The Deep Lake Knowledge Agent is tuned to be analytical and cautious in its responses. It shines on niche queries that require deeper thought, and is a weaker fit when you need an immediate, simple answer.

We are opening up the system for public preview to improve the product based on your feedback. We are also working on a router that alternates between “fast” and “slow” modes of thinking based on the complexity of the query.

How Flagship Pioneering Makes Big Leaps in Biotech with Deep Lake

Flagship Pioneering is a biotechnology company that invents platforms and builds companies focused on making bigger leaps in human health and sustainability. The company has partnered with Activeloop to enhance its RAG capabilities in scientific research. In this collaboration, Flagship’s Pioneering Intelligence team and Activeloop have developed a system using Activeloop’s Deep Lake Knowledge Agent. Flagship was able to search across the entire world’s scientific research, retrieving multi-modal biomedical data about 18% more accurately than traditional vector or keyword-based searches. Notably, they have been able to capture information from specific figures that is not mentioned anywhere in the article texts, further enhancing their research capabilities.

Fast, Accurate AI Search Over 40M+ Papers Across Data Modalities & Clouds for a Fortune 500 MedTech Company

Highly manual, repetitive search tasks in MedTech scientific discovery and compliance workflows have been automated with Deep Lake, compressing research timelines that formerly spanned months down to days.

Get started today via chat.activeloop.ai. The first week is free, with pricing starting at $99 per seat (and scaling depending on your data needs).