How to monitor models with Activeloop & manot

Well done. Your model is one of the few that finally made it into production. But, in the words of Lori Greiner from Shark Tank,

Why? Because apart from Words of Affirmation and Acts of Service, your models require… monitoring (some may say this stands for Quality Time).

Why should you monitor your ML model performance?

If you’re still scratching your head, these are some reasons why models require monitoring.

- As your data evolves, your model performance may decay (as some companies found out the hard way by over-relying on data collected during pandemic lockdowns).

- After deploying a model to production, it’s crucial to continue monitoring its performance to ensure that it’s functioning correctly and delivering accurate results.

- This model monitoring process helps to identify and resolve any issues with the model or the system that serves it before they cause a negative business impact. Like losing $440M because picking up wrong cues from redditors’ sentiment kind of negative.

- Finally, you want to continuously monitor your models as it helps to maintain transparency in the prediction process for all stakeholders involved, as well as serves as a path for continuous improvement.

Our new integration with manot, an ML model monitoring tool

Today, we’re thrilled to announce that you can monitor the performance of your models trained on your Deep Lake datasets, thanks to the partnership between Activeloop and manot. manot is a computer vision model observability platform that enables AI teams to improve their feedback loop by gaining insights into where their model may be performing poorly. This integration will bring tons of value across various applications, such as surveillance ML, autonomous vehicles & robotics, or image search. Before we present it in practice, let’s first review what challenges you might encounter while monitoring ML systems in production.

Why computer vision models fail in the production environment

As mentioned previously, the fact that your computer vision model performs well on the test set, it doesn’t mean it will do so on the real-world data. Why may a computer vision model fail in production?

Data Shift: Naturally, the real-world data can differ from the limited training data (i.e., its distribution may differ). If the distribution of data in production varies from the distribution of training data, the model’s accuracy could worsen. This typically occurs when data is trained on a different geographic location or at significantly different time of the year (e.g. buyer behavior during Black Friday is different from off-peak buyer behavior). When the distribution of data changes between the training phase and the production phase, we call it a covariate shift or distribution shift.

Data Drift: In contrast to data shift, data drift occurs when the distribution of data changes over time in the production phase (or a product’s popularity fading away over time). What causes data drift? A couple of factors, such as changes in the underlying process being modeled (e.g., switch of product packaging when doing ML in manufacturing), changes in the environment (buyer behavior due to macroeconomic factors), or changes in the data collection process (upgrading cameras that are used for facial recognition model training).

Overfitting: Overfitting typically occurs your model’s trained too closely on the training data. In effect, the model will not behave well when it meets real-world data - since there can be huge gaps between the real world and the model’s training dataset. As a result, the model in production does not generalize well and performs poorly.

Poor hyperparameter tuning: Poor hyperparameter tuning may cause the model perform unexpectedly in production because the model might not perform well the specific use case at hand.

Hardware limitations: The computational power & memory available in production may differ from those used during model development and testing, leading to performance bottlenecks. A typical example of this is training the model centrally in the cloud and then expecting it to perform well on edge devices (Raspberry Pis? More like raspberry pies…)

Lack of monitoring & maintenance: Without proper monitoring practices, the model’s accuracy will decay after deployment. This can manifest itself in outdated models, unpatched vulnerabilities, and more. This is why it is vital to incorporate model performance monitoring tools to detect the scenarios that the model may not be sufficiently exposed to during the training phase.

Model monitoring challenges at the data input level

There are several challenges that teams may face when monitoring ML models at the data input level. These include:

Data quality: Providing the quality of the input data is crucial for the model’s accuracy. As it has been proven time an again, poor-quality data leads to incorrect predictions and inaccurate results.

Lack of single source of truth for ML data: You may be using both private and public data for your training purposes, or storing your data locally or in different clouds. These disparate and unreliable data sources may lead to including outdated or otherwise faulty samples in your dataset. Thus the flexibility to handle a wide variety of data types and formats and the ability to integrate data from multiple sources is integral to a project’s success. Deep Lake recently solved an issue like this for Earthshot Labs, who managed to build a tree segmentation app and 5x their speed with 4x fewer resources required.

Unclear or evolving data schema: Your data schema evolves as your data structure requirements were not agreed upon before the project started, so now you’re comparing apples to oranges while evaluating model performance. Alternatively, the data science team doesn’t use a unified data format for their data, which complicates all things downstream (if only there were a unified data format better than legacy stuff like HDF5, built specifically for ML? oh wait, there is!).

Opaque or non-existent metadata for your production data workflow: you have done a gazillion experiments but can’t tell one from another. Nuff said. Start ensuring better data lineage.

Data preprocessing issues: Preprocessing the input data to be in the correct format for the model is a crucial step that can introduce errors and bias if not performed correctly.

Data heterogeneity: The input data can be highly heterogeneous, coming from different sources with different formats, making it tough to ensure the data is consistent and suitable for use by the model.

Data volume: The sheer volume of input data can make it challenging to monitor and detect issues with the data in real-time, unless you’re connecting your data to ML models with a performant data streaming setup.

Data privacy: Protecting sensitive data is a critical concern when monitoring data inputs (especially when doing ML in medicine, or applying machine learning in safety & security), as this information could contain personally identifiable or confidential information.

In sum, to effectively monitor data inputs, organizations must have robust data management processes in place, including data quality checks, preprocessing and validation, and privacy protection measures. They must also have the necessary technical infrastructure and expertise to handle the volume and complexity of the data.

Deep Lake & Model Performance Monitoring

Model performance monitoring involves evaluating how well a machine learning model is able to make accurate predictions based on new data (for analyzing visual data, it could be images or video). Model performance monitoring is especially important when working with unstructured data to identify outlier scenarios that the model has not been sufficiently exposed to during training, which can cause its accuracy to decrease.

Deep Lake can be utilized in model performance monitoring as well. As one of Activeloop long-time users, Arseniy Gorin, Head of the ML team at Ubenwa.ai mentioned in our chat recently:

“It is important to be able to launch and also kill a training process immediately because if something goes wrong, data scientists can stop it. So that’s why you start tracking it immediately. You look at the losses, and if something does go wrong, you can just kill it, inspect the data if that’s the bottleneck, fix it, and relaunch it again as opposed to, oh, I’m waiting, whilst we’re paying for this training process that is already doomed”

As a result, you can detect problems even before deploying the model in the real world, allowing teams to catch data drifts and deploy a model with high overall accuracy.

Computer vision model monitoring with manot

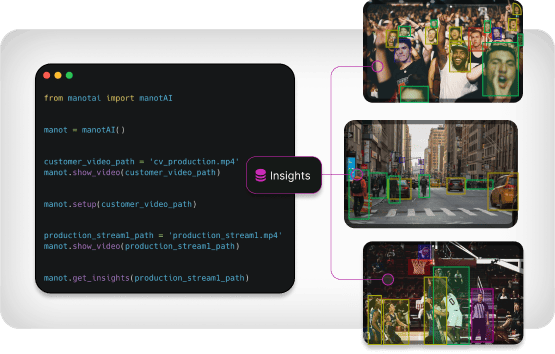

What is manot? manot is a platform for observing computer vision models. It assesses the performance of models during both pre- and post-production development. The system identifies outliers the model encounters and suggests similar data samples from sources such as Deep Lake datasets. When added to the training dataset, these improve the model’s accuracy. In effect, computer vision teams streamline their training data improvement process, while saving time and resources.

Demo of manot and Activeloop’s Integration

We start off by installing manot and Deep Lake. Once installed, import all of the necessary dependencies as shown in the code below and define your Deep Lake and manot tokens.

1!pip3 install -U manot

2!pip3 install -U deeplake

3!pip3 install --upgrade jupyter, ipywidgets

4

5from IPython.display import clear_output; clear_output()

6

7import deeplake

8from manot import manotAI

9import glob, os, tqdm

10

11#Tokens initializations

12os.environ['DEEPLAKE_TOKEN'] = '...'

13os.environ['MANOT_TOKEN'] = '...'

14

15deeplake_token = os.environ['DEEPLAKE_TOKEN']

16manot_token = os.environ['MANOT_TOKEN']

17

Next, we can move on to creating our Deep Lake dataset and defining our tensors.

1ds = deeplake.empty('hub://manot/manot-activeloop-demo', overwrite=True, token=deeplake_token)

2with ds:

3 ds.create_tensor('images', htype='image', sample_compression='jpg')

4 ds.create_tensor('bbox', htype='bbox')

5 ds.create_tensor('labels')

6 ds.create_tensor('predicted_bbox', htype='bbox')

7 ds.create_tensor('predicted_labels')

8 ds.create_tensor('predicted_score')

9

Once our tensors are ready, we have set up our dataset, we need to populate our tensors with the necessary data.

1with ds:

2 for image_name in tqdm.tqdm(images_list):

3 file_name = image_name.rsplit('/')[-1].rsplit('.')[0]

4

5 #parse detections

6 with open(path + '/detections/' + file_name + '.txt') as file:

7 lines = file.readlines()

8 predicted_labels = [int(line.split("\n")[0].split(' ')[0]) for line in lines]

9 predicted_scores = [float(line.split("\n")[0].split(' ')[-1]) for line in lines]

10 predicted_bboxes = [list(map(lambda x: float(x), line.split("\n")[0].split(' ')[1:-1])) for line in lines]

11

12 #parse labels

13 with open(path + '/labels/' + file_name + '.txt') as file:

14 lines = file.readlines()

15 labels = [int(line.split("\n")[0].split(' ')[0]) for line in lines]

16 bboxes = [list(map(lambda x: float(x), line.split("\n")[0].split(' ')[1:])) for line in lines]

17

18 #append to the dataset

19 ds.append({

20 "images": deeplake.read(image_name),

21 "bbox": bboxes,

22 "labels": labels,

23 "predicted_bbox": predicted_bboxes,

24 "predicted_score": predicted_scores,

25 "predicted_labels": predicted_labels

26 })

27

28#if you want to visualize the dataset with Deep Lake, you can do so by running the following command

29ds.visualize()

30

We are now ready to pass the data to manot in order to detect outliers. In this use case, we will be observing the performance of YOLOv5s (small) on the VisDrone Dataset (click here to see how to upload data in YOLOv5 format to Deep Lake). For the initial setup, we will define the paths of the images, the ground truth data (labels) and the detections. The format of the metadata refers to the format of the labels (bounding boxes). In the example below we are using XYX2Y2. Lastly, we will provide the classes for our model. Note that the data can be stored either locally or on a service such as Amazon S3. Once you have completed the setup process, manot will return a specific id number for the setup. We will use this in the next step for retrieving insights from manot.

1 setup = manot.setup(

2 data_provider="deeplake",

3 arguments={

4 "name": "manot-activeloop",

5 "detections_metadata_format": "xyx2y2", # it must be one of "xyx2y2", "xywh", or "cxcywh"

6 "classes_txt_path": "",

7 "deeplake_token": deeplake_token,

8 "data_set": "manot/manot-activeloop-demo",

9 "detections_boxes_key": "predicted_bbox",

10 "detections_labels_key": "predicted_labels",

11 "detections_score_key": "predicted_score",

12 "ground_truths_boxes_key": "bbox",

13 "ground_truths_labels_key": "labels",

14 "classes": ["person"],

15 }

16 )

17

Next, let’s get insights from manot on where the model is performing poorly. We will call the insight method and pass a number of parameters to define the model, data stream, and dataset that we will be observing the model’s performance on. The setup_id refers to the id that was returned after initializing the setup method in the earlier step. Our data provider for this example is Activeloop’s Deep Lake, a data lake designed for deep learning applications. The computer vision model we will be using is YOLOv5s on the VisDrone Dataset, both which are defined using the weight_name and data_path parameters respectively.

1insight = manot.insight(

2 name='manot-activeloop',

3 setup_id=setup_info["id"],

4 data_path='activeloop/visdrone-det-val',

5 data_provider='deeplake',

6 deeplake_token=deeplake_token,

7)

8insight

9

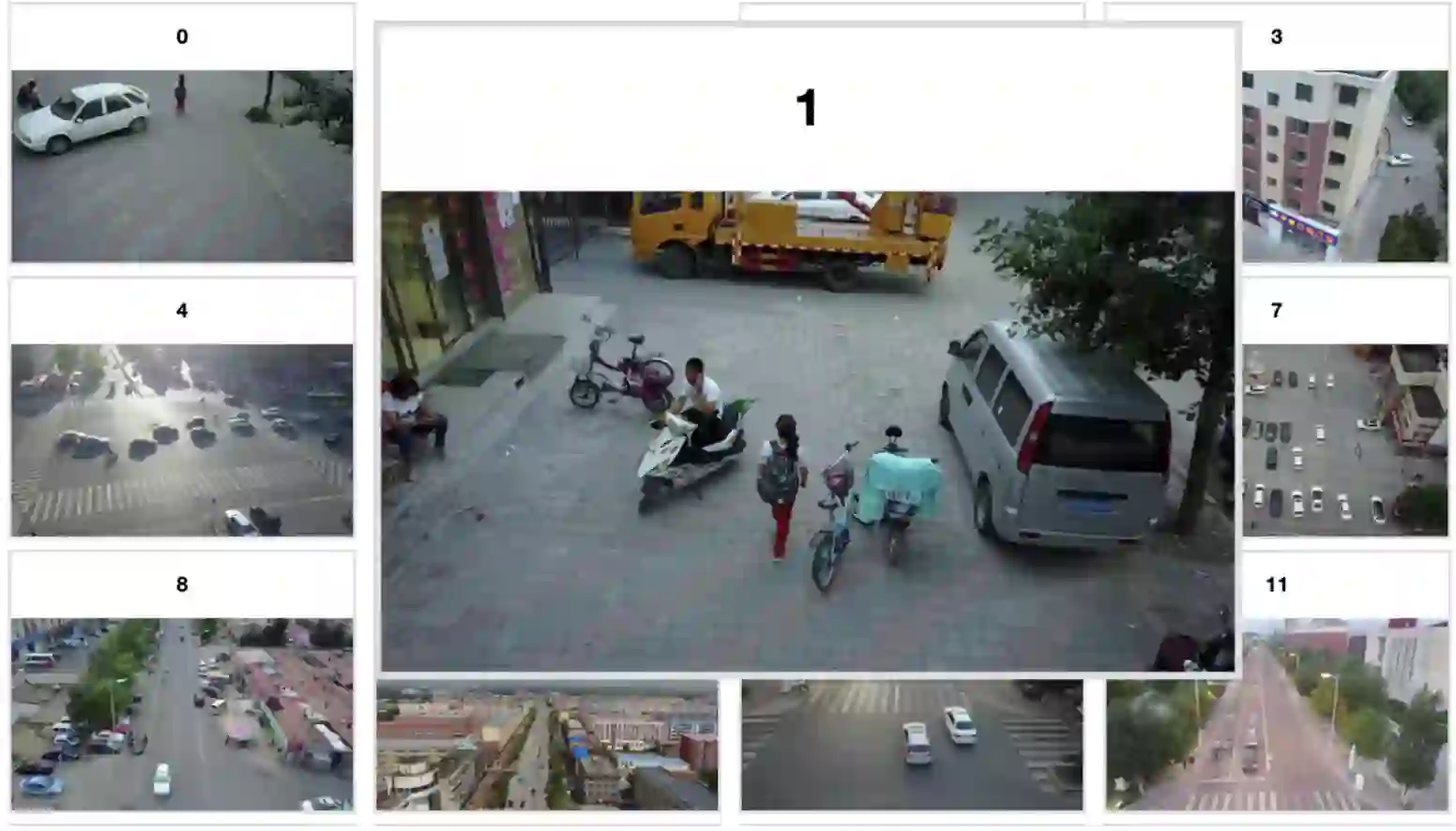

Once the data has completed processing, manot will return an id value for the insights, and as seen below, using the visualize_data_set method, we can see a grid of outliers that manot has identified as areas where the model will perform poorly. These are the insights that we can now extract, label and add to our training dataset in order to improve the performance of our model.

manot.visualize_data_set(insight_info["data_set"]["id"], deeplake_token)

You can run the Google Colab for Activeloop + manot yourself.