Akash Sharma, CEO of Vellum, and Sinan Ozdemir, Founder & CTO at LoopGenius, authored the following blog post. Their shared expertise has culminated in a blog post that explores Vellum’s Playground, a solution for finding the right prompt/model mix for one’s use case.

Introduction: Evaluating Large Language Models for Building AI-enabled Apps

Let’s skip the cliché opener about how AI is changing faster than ever and how Large Language Models (LLMs) are revolutionizing everything from chat-with-your-data apps to medical imaging. It is, and they are. Instead, let’s dive headfirst into a common problem that anyone working with LLMs will face on their project - how to pick the right LLM for the task at hand.

We’re going to compare four leading LLMs from three top AI companies. We aim to explore the LLMs’ strengths and weaknesses and provide a framework for comparing any models you choose for any task you want to perform. So, which models are we examining, and why have we chosen them?

OpenAI’s GPT-3.5: GPT-3.5 (the model that powered ChatGPT at launch) is one of the first widely popular LLMs and is used both in a web form and API form by people all over the globe. We also want to illustrate the evolution within this series of models. By placing GPT-3.5 and its successor side by side, we aim to highlight the improvements and modifications made from one version to the next.

OpenAI’s GPT-4: OpenAI’s latest model, GPT-4, holds some mysteries, such as its actual size and the exact data used in its training. Nonetheless, it is widely recognized as one of the top-performing LLMs, if not the best.

Anthropic’s Claude: Claude AI is Google-funded Anthropic’s answer to ChatGPT by OpenAI. Claude stands out because of its unique training approach. It employs Reinforcement Learning from AI Feedback (RLAIF) instead of the more commonly used Reinforcement Learning from Human Feedback (RLHF). This divergence in training methodologies allowed Anthropic to train a model comparable to modern GPT models with fewer constraints. Their “Constitutional AI” approach is considered a first of its kind, allowing Claude to consider dynamic instruction and change its outputs to align with the task seamlessly. Although it didn’t impact our coverage of the model performance, we have to thank Claude’s team for granting Activeloop an early preview.

Cohere’s Command series of models: Cohere makes the cut because its models are cost-effective and have shown real potential when well-guided through prompt engineering. Moreover, including Cohere allows us to discuss potential challenges users may encounter with specific LLMs.

When comparing any set of models, we also have to consider our task, as that will influence how we judge the output of any LLM and therefore affect how we compare quality across models. We are going to walk through four examples:

Text Classification - Detecting offensive language: This use case involves evaluating the models’ performance in detecting offensive language. It is a nuanced classification task where the models must identify offensive content without context.

Creative Content Generation with rules/personas: We will ask our models to write email responses while adhering to specific rules or personas. The goal is to see how well the models can follow instructions and adopt different voices or personas in their responses.

Question Answering and Logical Reasoning: The objective is to test the models’ mathematical and logical abilities. We will use examples from the GSM8K dataset, which consists of math word problems, to evaluate the models’ performance in solving mathematical problems and understanding logical reasoning.

Code Generation: We’ll ask our Large Language Models to generate Python code snippets from English-language descriptions of programming tasks, testing their abilities to comprehend these instructions and accurately translate them into code. This task will challenge the models’ understanding of natural language and their grasp of programming language syntax and logic.

To provide a framework for comparing the performance of our LLMs on these different tasks, we will consider three main metrics for performance/quality:

Accuracy: This performance measure refers to how often a model responds correctly to a given prompt. In the case of classification, it is the percentage of instances where the model’s classification matches the ground truth. For problem-solving, it is the ratio of problems correctly solved to the total number of problems.

Semantic Text Similarity: This considers the similarity in meaning between two pieces of text, regardless of the exact wording used. This metric is standard in use cases like content generation, where the goal isn’t necessarily an exact match to a predefined template but to generate text that captures the same concept, sentiment, or information as the ground truth. It’s a robust measure of how well a model understands and generates content that aligns with the intended message. In our case, semantic text similarity is measured as the cosine similarity between the embeddings produced by an LLM behind the scenes.

Robustness: This refers to a model’s resilience in the face of varying, unexpected, or challenging inputs. It measures how well the model performs when faced with unusual prompts, nuanced tasks, or adversarial scenarios. A model with high robustness should be able to handle a wide range of tasks and scenarios without a significant drop in performance.
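The first two metrics above are simple enough to sketch in a few lines. The snippet below is a minimal illustration, not Vellum’s implementation: the function names are hypothetical, the label normalization (trimming whitespace and lowercasing) is an assumption, and the embedding vectors are expected to come from whatever embedding model you use.

```python
import math


def exact_match_accuracy(predictions, labels):
    """Fraction of predictions equal to the ground-truth label.

    Assumption: labels are compared after trimming whitespace and lowercasing.
    """
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        return 0.0
    matches = sum(
        pred.strip().lower() == truth.strip().lower()
        for pred, truth in zip(predictions, labels)
    )
    return matches / len(labels)


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention chosen here for all-zero vectors
    return dot / (norm_a * norm_b)
```

A score near 1 means the two embeddings point in almost the same direction, which is read as "similar meaning"; a score near 0 means they are unrelated.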

It’s important to remember that each model has unique features and advantages, and the ‘best’ model will ultimately depend on your specific use case, test cases, budget, and performance requirements. The goal of this post is not to declare any of these models a “winner” but rather to help you think about judging the quality and performance of models in a more structured way using Vellum – a developer platform for building production LLM apps.

How to Interpret Results in Vellum’s Playground

We’ll be making all the prompt comparisons in Vellum's Playground. Vellum is a tool for deploying production-grade Large Language Model features, providing resources for prompt creation, semantic search, version management, testing, and monitoring. It’s compatible with all key LLM providers.

Before jumping into the evaluation details, this screenshot has a quick primer on interpreting the UI.

With this clear, let’s get into the first example!

Text Classification - Offensive Language Detection

Classification is the most apparent application of LLMs with prompts that are relatively easy to engineer. When working with classification, one straightforward metric for evaluating our LLMs is exact-match accuracy, representing the percentage of instances where the LLM’s classification matches the ground truth. However, classification isn’t always a simple task. The final label assigned to a text may require complex reasoning or be subjective. Consider the task of offensive language detection.

Offensive language detection aims to… well… detect offensive language. But this is subjective and frankly pretty nuanced, and humans will disagree about which pieces of text are offensive and which aren’t. All modern LLMs have at some point been exposed to raw human-generated data from the open/closed internet and feedback on output quality either explicitly with RLHF or implicitly with RLAIF. When humans are tasked with grading LLM outputs for offensive behavior, their biases will slip in, influencing the model. This isn’t necessarily detrimental, but its influence becomes evident rather quickly. Check out what happens when I ask Cohere and GPT-4 to classify a piece of text as offensive or non-offensive with no clear instruction on what I consider offensive or not:

Cohere and GPT-4, without instruction on what is offensive or non-offensive, have different opinions with a zero-shot classification of a nuanced and subjective classification task. Cohere gets the answer wrong (at least according to my ground truth label), whereas GPT-4 gets it right. So does that mean that GPT-4 is better at offensive language detection? We cannot state yes or no without doing at least some work to guide the LLMs on what we consider offensive.

If I tweak the prompt slightly to give it some guidelines on what I consider offensive or not, then Cohere suddenly is on the same page as me:

This modified classification prompt gives Cohere guidance on what I consider offensive, and then I can get my content about the Confederate flag labeled correctly.

There are many ways we can provide in-context learning to our LLMs, including adding clear rules and instructions as I have done, by including a few examples of offensive or non-offensive content, and by utilizing “chain of thought prompting” for the LLM to give its reasoning first.
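To make those three options concrete, here is a minimal sketch of a prompt builder that can combine rules, few-shot examples, and a chain-of-thought instruction. The function name, the wording of the instructions, and the output format are all illustrative assumptions, not the prompts used in this comparison.

```python
def build_classification_prompt(text, rules=None, examples=None, chain_of_thought=False):
    """Assemble a classification prompt from optional in-context guidance.

    rules: list of plain-language guidelines (hypothetical wording).
    examples: list of (text, label) pairs used as few-shot demonstrations.
    chain_of_thought: if True, ask the model to reason before labeling.
    """
    parts = ["Classify the following text as Offensive or Non-offensive."]
    if rules:
        parts.append("Guidelines:\n" + "\n".join(f"- {rule}" for rule in rules))
    if examples:
        shots = "\n\n".join(f"Text: {ex}\nLabel: {label}" for ex, label in examples)
        parts.append("Examples:\n" + shots)
    if chain_of_thought:
        parts.append(
            "Explain your reasoning step by step, then give the final "
            "answer on its own line as 'Label: <label>'."
        )
        parts.append(f"Text: {text}\nReasoning:")
    else:
        parts.append("Respond with only the label.")
        parts.append(f"Text: {text}\nLabel:")
    return "\n\n".join(parts)
```

Running the same test cases through each variant (rules only, examples only, chain of thought) is a cheap way to see which form of guidance moves a given model the most.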

Regarding robustness, when we attempted to create prompts designed to “trick” the LLM into outputting a particular category, we could sway the LLMs, as seen in the following figure. Still, it also tended to end up as a team discussion on what kinds of texts are offensive and whether we truly “tricked” the LLM or not depending on our definitions of the task, which speaks once more to the subjectivity of the task at hand.

When I told our LLMs that I am the survivor of a Confederate attack, I was able to inject an additional instruction into the LLM, and some of the models (mainly Claude, GPT-3.5, and Cohere) were more likely to change their answer to be more in line with the injected direction.

After all that, GPT-4 performed the best on this task regarding accuracy in our test cases.

Content Generation - Email Replies

Content generation is where models like GPT first gained significant attention with their seemingly effortless ability to quickly generate readable content, given a few rules and guidelines. Companies leverage LLMs to facilitate content generation, from drafting marketing copy to summarizing meeting notes. Let’s take an example we can all relate to: replying to an email.

For example, you can provide prompts that specify a particular persona, voice, and prompt chain and observe how each model generates email replies or other types of content accordingly. The comparison can focus on the models’ ability to maintain consistency, creativity, and relevancy within the given constraints.

Another metric we can see here is latency - the model’s speed at processing a single input. GPT-4 is noticeably slower regarding longer content generation than the other models. We will touch on this a bit more in the next section.

Once GPT-4 had finished generating its answers, I was able to compare the outputs, but therein lies another problem: I don’t want to read all four responses to the email for each of my test examples, and exact-match accuracy would be unfair because I want the LLMs to be creative while sticking to a general gist. Therefore, I’ve chosen to use semantic similarity for my comparison. Simply put, I’m relying on yet another LLM behind the scenes at Vellum that compares our LLM outputs to a ground truth output based on semantic similarity: how similar they are from a conceptual perspective rather than an exact word-for-word match.

The semantic similarity measurement tool leverages natural language understanding to comprehend the underlying meaning and sentiment of the text instead of merely checking for identical wording. It can detect the key themes, significant details, and overall intent, even if the linguistic expression varies. If the output strays too far from the main idea, the score would be low, regardless of the originality or creativity of the phrasing. Conversely, the score would be high if the output accurately captures the essence of the ground truth, even if expressed uniquely.

GPT-4 (left) rambled on more than I wanted and was more formal than my ground truth reply. GPT-4 also included a subject line where it wasn’t necessary. Claude (right), meanwhile, was closer to my sample email semantically but also included a bit of extraneous information at the top (the “Here’s a draft response”). We could attempt to prompt away the chatter/subject line, but chose not to in this case, to showcase how different LLMs decide to give different bits of extraneous information without explicit instruction.

Semantic similarity in its traditional sense is also a technique data scientists use to retrieve relevant documents, text, and images for comparison. Deep Lake’s cloud offering pairs with various providers, such as S3 and Snowflake, to make this search easy to understand and implement.

Semantic similarity enables a fair evaluation of our LLMs’ outputs by focusing on the quality and appropriateness of the content rather than its conformity to a predefined template. It also encourages creativity within the boundaries of the topic, ensuring that the responses are not only accurate but also engaging and insightful.

It was fairly easy to trick the LLM into creating obscure content in this context for all models because all of these LLMs were specifically aligned to be helpful, so if we asked for odd things in the incoming email, the LLM addressed them with as much professionalism as possible. I receive emails about various things daily, so I’m more impressed than worried. If this were considered part of a production-ready application, I would strongly recommend adding input and output validation systems to catch these rather than rely on models to figure it out.

For context, Claude performed the best with our examples, only slightly beating out GPT-3.5. We could attribute part of Claude’s success to its fine-tuning process, as it was specifically designed to adapt its output based on dynamic instructions and guidance. This particular example has the most variables of any we’ve used, highlighting areas where Claude’s capabilities truly excel.

Latency and Cost in Large Language Model Context

So far, we have primarily focused on the performance of LLM outputs in our tasks. Of course, there are other practical factors to consider when evaluating and comparing these models, particularly latency and cost. Let’s pause our task evaluation to consider these two critical considerations that can significantly impact the usability and feasibility of a model for certain applications.

Latency is a model’s time to respond to a prompt or execute a task. This is important because it affects user experience, especially in real-time or near real-time applications such as chatbots, digital assistants, and real-time content generation.

We’ve already noticed that GPT-4 tends to have a slower response time than the other models. It’s important to highlight that this latency is at the input/generated token level—meaning the time taken to generate each token — and therefore doesn’t primarily depend on the specific task. This means that whether the model is classifying text, generating the content, solving math problems, or producing code, its inherent latency (for the most part) remains consistent. So if speed is a critical factor for your application, this might be a significant deciding factor.
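If you want to check this for your own setup, a rough timing harness is enough. The sketch below is an assumption-laden illustration: `generate` stands for any callable that sends a prompt to a model and returns text, `stub_model` is a stand-in so the snippet runs without an API key, and the whitespace word count is only a crude proxy for a provider’s real token count.

```python
import statistics
import time


def measure_latency(generate, prompt, runs=5):
    """Time repeated calls to `generate` and report median latencies."""
    per_call = []
    per_token = []
    for _ in range(runs):
        start = time.perf_counter()
        output = generate(prompt)
        elapsed = time.perf_counter() - start
        per_call.append(elapsed)
        # Crude proxy: whitespace-separated words instead of real tokens.
        approx_tokens = max(len(output.split()), 1)
        per_token.append(elapsed / approx_tokens)
    return {
        "median_seconds": statistics.median(per_call),
        "median_seconds_per_token": statistics.median(per_token),
    }


def stub_model(prompt):
    """Hypothetical stand-in for a real model call."""
    time.sleep(0.01)
    return "This is a stub reply"


print(measure_latency(stub_model, "Reply to this email politely.", runs=3))
```

Normalizing by output length matters because a model that writes longer replies will look slower per call even if its per-token speed is the same.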

Cost is another crucial consideration. The cost of running an LLM is often measured in input and generated tokens, with the total cost being dependent on the number of tokens processed. Tokens are essentially chunks of text that the model reads or generates. A token could be as short as one character or as long as one word. For English text, a token is typically a single word or part of a word.

Again, this cost is primarily a function of the token count, not the nature of the task. So whether the model generates an email reply, detects offensive language, or solves a math problem, the cost will depend on the number of tokens it processes and generates.
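As a back-of-the-envelope sketch, the cost of a single request can be estimated from its token counts. The function name and the prices below are placeholders chosen for illustration only; real per-token prices differ by provider and model and change over time, so substitute the figures from your provider’s pricing page.

```python
def estimate_cost(input_tokens, output_tokens, input_price_per_1k, output_price_per_1k):
    """Estimate the cost of one request from its token counts.

    Prices are per 1,000 tokens; input and generated tokens are often
    billed at different rates, so they are kept separate here.
    """
    input_cost = (input_tokens / 1000) * input_price_per_1k
    output_cost = (output_tokens / 1000) * output_price_per_1k
    return input_cost + output_cost


# Placeholder prices, NOT real provider pricing.
cost = estimate_cost(
    input_tokens=2000,
    output_tokens=500,
    input_price_per_1k=0.01,
    output_price_per_1k=0.02,
)
print(f"Estimated cost per request: ${cost:.4f}")
```

Multiplying the per-request figure by your expected request volume gives a quick monthly estimate to weigh against accuracy and latency.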

How to Balance Performance, Latency, and Cost for Large Language Models?

Balancing performance against practical considerations like latency and cost is essential when selecting an LLM. A model that excels in accuracy or semantic similarity but takes too long to generate responses might not be suitable for time-sensitive applications. Similarly, a fast and affordable model that doesn’t meet your accuracy needs might not be the best choice for your use case.

Another key factor contributing to LLM costs is the number of tokens fed into the model. One way to reduce this overhead is by only using documents or parts of the text that are relevant to the task at hand. To do this, Deep Lake can store and retrieve your text and embeddings in the same table, providing a quick and easy solution for developers to reduce spending on LLM applications.

It should come as no surprise that the key is understanding your specific needs, constraints, and budget and choosing the model that best balances them. Maybe you’re OK with a 5% hit on accuracy if the model is 10x cheaper and 5x faster, provided you have sufficient error handling/support services built into the product. By understanding these aspects, you’ll be better equipped to choose the most suitable LLM for your tasks and ensure the success of your AI-driven applications. Back to the tasks!

Question Answering with Logical Reasoning

While classification and content generation are more “useful” day-to-day applications of large language models, many enthusiasts and researchers, such as myself, are more intrigued by an LLM’s ability to break down and reason through a problem. An LLM’s capability isn’t just about finding the right answer to a simple math problem—it’s about demonstrating an understanding of the problem-solving process itself.

We’ll use a “chain of thought” prompting technique to assess this. This involves asking the model to solve the problem and explain its reasoning step-by-step, as a human might. This approach nudges the LLM into producing a ‘chain of thought’ that showcases its grasp of the problem-solving steps.

Let’s take an example word problem from the dataset: “Kendra has three more than twice as many berries as Sam. Sam has half as many berries as Martha. If Martha has 40 berries, how many berries does Kendra have?” The correct answer, 43, is relatively straightforward to compute. But how does the LLM get there? A desirable response would be something like this:

- Martha has 40 berries

- Sam has half as many berries as Martha. Half of 40 is 20. So Sam has 20 berries.

- Kendra has 3 more than twice as many berries as Sam.

- Twice 20 is 40. 40 + 3 is 43.

- So Kendra has 43 berries

This unveils the correct answer and reveals a logical journey toward it.

We present our models with a spectrum of math problems, from elementary arithmetic to intricate algebra and calculus. We assess their responses for accuracy and the depth and clarity of their problem-solving pathway. How well do they demonstrate an understanding of mathematical concepts and processes? Can they explain their reasoning clearly and logically? These are the questions we seek to answer in our comparison of LLMs.

Below is a sample of results from our four models showing that 3/4 of our models seem to have the prerequisite knowledge to solve many of these questions, with Cohere lagging a bit in quality. Because we are asking for a chain-of-thought response, where the reasoning text technically doesn’t matter as much as the final answer, we can use a regex match in the Vellum Sandbox to find the answer at the end in the answer format defined in the prompt. In this instance, I used “Answer: {{answer}}” as the regex pattern to look for.
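Outside of Vellum, the same idea can be sketched with Python’s `re` module. This is an illustration under assumptions, not Vellum’s matcher: the pattern, the function names, and the choice to take the last “Answer:” line in the completion are all hypothetical.

```python
import re

# Matches "Answer: 43", "Answer: $1,250.50", "Answer: -7", and similar.
ANSWER_PATTERN = re.compile(r"Answer:\s*\$?(-?[\d,]*\.?\d+)")


def extract_answer(completion):
    """Return the last numeric answer in 'Answer: <number>' form, or None."""
    matches = ANSWER_PATTERN.findall(completion)
    if not matches:
        return None
    # Use the last match so numbers in the reasoning steps are ignored.
    return float(matches[-1].replace(",", ""))


def is_correct(completion, expected):
    """True when the extracted answer equals the expected value."""
    answer = extract_answer(completion)
    return answer is not None and answer == expected


completion = (
    "Martha has 40 berries. Sam has half as many, so 20. "
    "Twice 20 is 40, and 40 + 3 is 43.\nAnswer: 43"
)
print(extract_answer(completion), is_correct(completion, 43))
```

A completion that never produces the “Answer:” line returns None and counts as wrong, which also penalizes models that ignore the requested output format.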

GPT-4, Claude, and GPT-3.5 (left, second from the left, and third from the left, respectively) all go about explaining the solution steps differently but ultimately get the answer right. Cohere (right) struggled to get the answer right and to put it in the format defined in the prompt. It was noticeable once again how much slower GPT-4 is compared to the other models. So in this case, even though GPT-4 ended up being the most performant, considering the speed, I might lean towards GPT-3.5 with some additional prompting.

Content generation is a task that benefits from having source data from which to tailor a model’s output. It is also typically quite difficult to feed in context without driving up the cost of the model and sometimes even the quality, as not all contexts may be useful. For this reason, we recommend exploring dedicated databases you can use to accurately retrieve the right information for the task at hand. One such offering is Activeloop’s Deep Lake, which allows you to store and retrieve text, images, and their embeddings all in the same table.

Considering robustness in the specific case of math/logical reasoning might look like asking the LLM to solve a problem rather oddly, as seen in the following figure.

Constitutional AI models like Anthropic’s Claude (right) can be more likely to follow dynamic instructions to a fault. In this case, I asked it to limit reasoning to a single line in Turkish, and Claude followed my instruction and then got the answer wrong. On the other hand, OpenAI’s GPT-4 (left) blatantly ignored the instruction of only offering a single line of reasoning to get the answer right, and its Turkish isn’t half bad either.

For context, GPT-4 won this one comfortably, with Claude and GPT-3.5 a relatively close second on a sample of GSM8K, but again GPT-4 was easily 4-5x slower than its colleagues in generating results. It’s known that GPT-4 and GPT-3.5 were graded on their ability to reason through problems with human feedback, so it’s no surprise that one of these models excelled in our chain of thought example.

Code Generation

Understanding codebases with LLMs or generating novel programming code using natural language descriptions has become quite an intriguing subject. So, to test the capabilities of our LLMs, we tasked them with generating Python code snippets from English-language descriptions of programming tasks.

Our LLMs will be provided with tasks in English, describing what a particular piece of Python code should do. For example, “Create a Python function that calculates the factorial of a given number.” The objective is to evaluate how well each model can comprehend these instructions and translate them into accurate, executable Python code.

Again, while the exact-match accuracy metric works well for classification tasks, evaluating code generation presents unique challenges. Here, we aren’t looking for word-for-word matches but for functionally correct code. Even if the code produced by the model doesn’t match our reference code exactly, it’s considered correct if it satisfies the task requirements and works as expected. For this reason, we will use a custom webhook solution with Vellum with a server we built to compile and run code against some unit tests. Notably, we could also evaluate the output on style and code requirements (typing, adherence to PEP-8, etc.) which our webhook could perform.
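The core of such a checker can be sketched in a few lines. This is not the server we built; it is a simplified, hypothetical version in which the function name, the result format, and the test cases are assumptions. Note that `exec` runs the code in the current process and provides no sandboxing, so a real service should execute untrusted model output in an isolated environment with time and resource limits.

```python
def run_unit_tests(generated_code, function_name, test_cases):
    """Execute model-generated code and count how many test cases pass.

    test_cases: list of (args_tuple, expected_result) pairs.
    WARNING: exec offers no isolation; do not use as-is on untrusted code.
    """
    total = len(test_cases)
    namespace = {}
    try:
        exec(generated_code, namespace)  # also surfaces syntax errors
    except Exception as exc:
        return {"passed": 0, "total": total, "error": f"{type(exc).__name__}: {exc}"}

    func = namespace.get(function_name)
    if not callable(func):
        return {"passed": 0, "total": total, "error": f"{function_name} is not defined"}

    passed = 0
    for args, expected in test_cases:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash on one test case counts as a failure
    return {"passed": passed, "total": total, "error": None}


# Example output a model might return for the factorial task.
generated = "def factorial(n):\n    return 1 if n <= 1 else n * factorial(n - 1)\n"
print(run_unit_tests(generated, "factorial", [((0,), 1), ((5,), 120)]))
```

Scoring by the fraction of tests passed, rather than pass/fail, gives partial credit to code that handles the common cases but misses an edge case.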

Interestingly, the code snippets generated by the models often took different approaches to solve the same task, showcasing their unique problem-solving abilities. While this code generation comparison is far from exhaustive and doesn’t cover all aspects of software development, it provides an exciting look at how Large Language Models can contribute to this field, assisting software developers with tasks ranging from debugging to coding assistance.

On more than one occasion, with our temperature turned up (leading to more diverse outputs over several runs), the LLMs would sometimes generate buggy code even with the same model/prompt. This is not unexpected; it shows that a great prompt and LLM are rarely enough. We need to consider other levers at our disposal in future posts.

With our examples, GPT-4 was the best performer, GPT-3.5 followed closely behind, and Cohere and Claude were better suited for more straightforward tasks. Remember, the success of a model depends on the nature and complexity of the tasks it’s assigned, and it’s always recommended to test the models in the context of your specific use case to determine the best fit.

Code generation typically works best with an existing codebase on which to draw, such as a project or company’s repository. Unfortunately, many LLM context windows are too small to accommodate a complete project, which is where Deep Lake comes in. By storing an indexed version of the code, LangChain, Deep Lake, and any LLM you choose can be used together to enable more accurate completions that can reduce development time and speed up the iterative design process.

Concluding Remarks: No ‘One Size Fits All’ LLMs for Now

In our quest to understand the performances of various LLMs in the context of various real-world applications, our goal was to underscore the importance of considering the performance metrics relevant to each use case and the unique attributes of the LLMs, including their respective training methodologies and characteristics.

Offensive Language Detection proved to be a nuanced task, highlighting the influences of subjectivity and inherent biases on the models. While GPT-4 performed best on this task, the experiment emphasized the importance of providing clear guidelines to LLMs to refine their understanding of offensive content.

Semantic similarity was crucial in evaluating the generated email replies. Claude excelled in this area, marginally outperforming GPT-3.5, by providing responses that stayed close in meaning to the sample emails, most likely due to its nature of taking in rules (constitution) and adjusting its output as needed. This test also exposed GPT-4’s slower processing speed, which may be a significant consideration for applications requiring faster response times.

In the Mathematical Problem-Solving task, GPT-4 demonstrated superior performance, albeit at a slower speed than the others. The experiment underscored the LLM’s potential in understanding problem-solving processes and generating a coherent ‘chain of thought,’ though Cohere struggled a bit in this area.

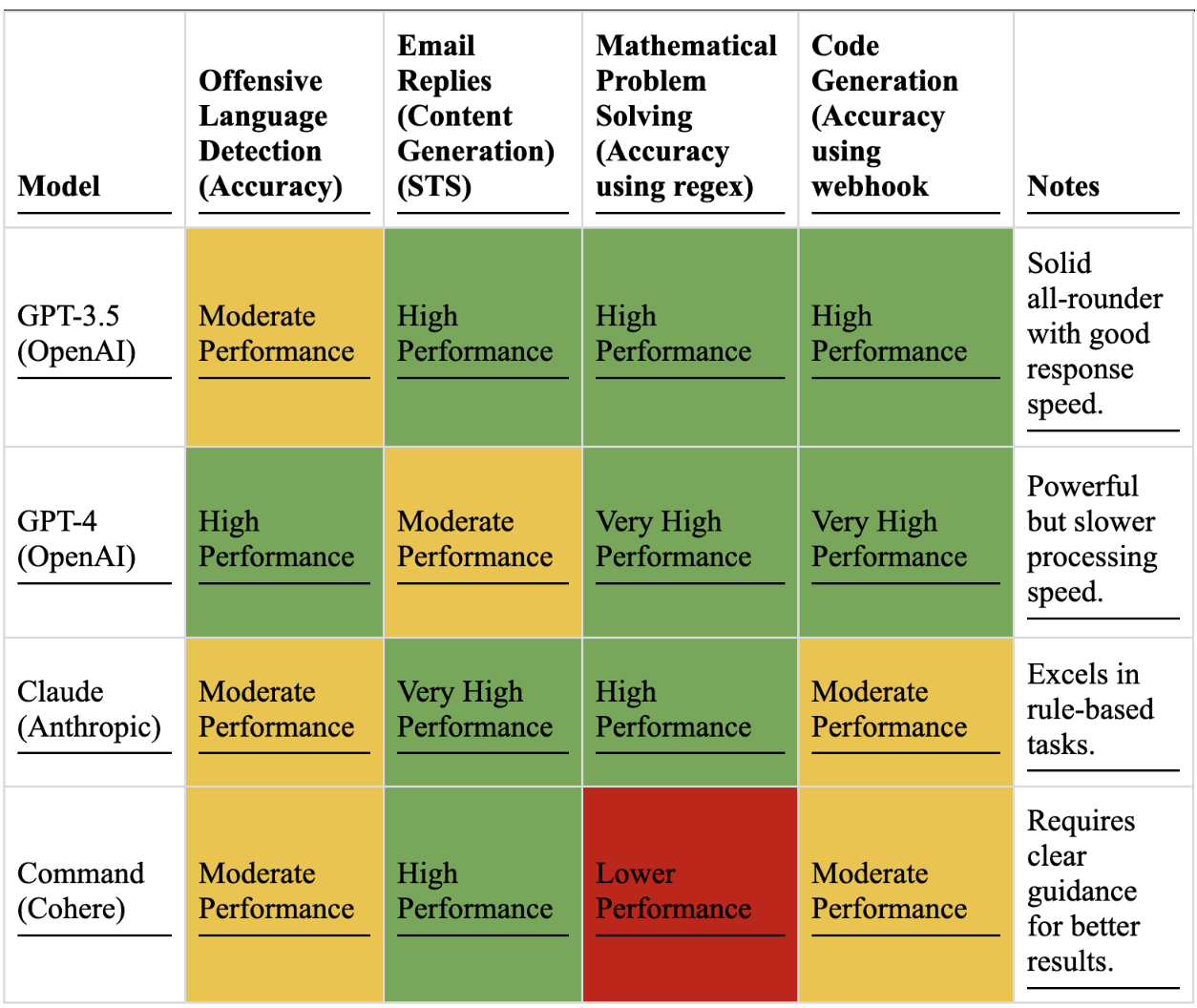

This table gives a snapshot of our LLMs’ performance across the tasks. Each task’s performance is rated based on the combined evaluation of the specific metric applied (accuracy, semantic similarity, logical reasoning + regex match) and the inherent characteristics of the LLM (response speed, ability to follow instructions, creativity).

Ultimately, the key takeaway from our comparative study is clearly understanding your needs and how different models can best meet those requirements. As we’ve seen, each model has strengths and nuances that can be effectively leveraged if appropriately understood. As the dynamic landscape of AI continues to evolve, mastering the ability to discern and compare the capabilities of different LLMs given specific tasks will become a vital skill for navigating this exciting field.

If you find yourself trying to figure out which model to use for which task and would like to use Vellum to experiment with prompts and compare one model to another, you can sign up here. For any feedback or questions about Vellum, please contact Akash at akash at vellum.ai.

Large Language Model FAQs

What are Large Language Models (LLMs)?

Large language models are trained on vast amounts of text data. They are capable of producing output across various tasks such as natural language generation, classification, translation, and more. Examples include GPT-4 by OpenAI and Claude by Anthropic.

How do Large Language Models work?

LLMs use self-attention and artificial neural networks to analyze large datasets, learn language patterns, and generate new text. They develop an understanding of semantics, context, and syntax to complete language tasks. Performance depends on size of datasets, computing power, and model architecture.

What is Anthropic Claude?

Anthropic’s LLM, trained using Constitutional AI. It can adapt responses based on feedback and guidelines. Performed well on email reply generation.

What is Constitutional AI?

An approach used to train Claude, Anthropic’s LLM. It employs Reinforcement Learning from AI Feedback instead of human feedback. This allows Claude to consider dynamic instruction and adjust outputs accordingly.

What is Vellum?

Vellum is a developer platform for building and deploying production LLM applications through simultaneously evaluating various LLMs’ performance across the same set of tasks. Used in this study to compare LLM responses to prompts. Provides metrics like semantic similarity to evaluate generated content.

What metrics were used to evaluate the LLMs?

Several metrics can be used to evaluate Large Language Models. These include accuracy (percentage of correct classifications or solutions), semantic text similarity (meaning-based comparison of content), robustness (performance on varying, challenging inputs), latency (response time), and cost (based on the number of tokens processed).

What are some limitations of current LLMs?

Small context windows limit tasks like code generation. Subjective tasks are influenced by inherent biases. Models can generate “buggy” outputs. More data and compute could continue improving performance.

What are promising future directions for LLMs?

Applications in various domains like healthcare, business, and education. Techniques like Constitutional AI for refinement. Integrating multiple models. Continued progress in model capabilities and mitigating limitations.

What advice is offered for developing LLM applications?

Understand your needs, constraints and budget. Test models in your context. Consider metrics beyond accuracy, e.g. speed and cost. Provide guidelines and feedback to improve performance. Use tools to overcome limitations. Choose models balancing performance, latency, and cost.

What is the difference between OpenAI’s GPT-4 and GPT-3.5?

GPT-4 is OpenAI’s latest, most advanced model, while GPT-3.5 (the model that powered ChatGPT at launch) is an older version. OpenAI has not disclosed GPT-4’s size or training data, but it is widely believed to have been built with more parameters, training data, and compute, leading to better performance on complex tasks. However, GPT-4 also has higher latency (slower response time) and cost. GPT-3.5 is more widely used but has more limited capabilities.

How does OpenAI’s GPT-4 compare to GPT-3.5?

GPT-4 performed best in offensive language detection, math problem-solving, and code generation but was slower and more expensive. GPT-3.5 performed second-best in email reply generation and math reasoning, with faster responses but more limited abilities. Overall, GPT-4 excelled at complex, nuanced tasks but at the cost of speed and expense. GPT-3.5 provides a more balanced capability and performance.